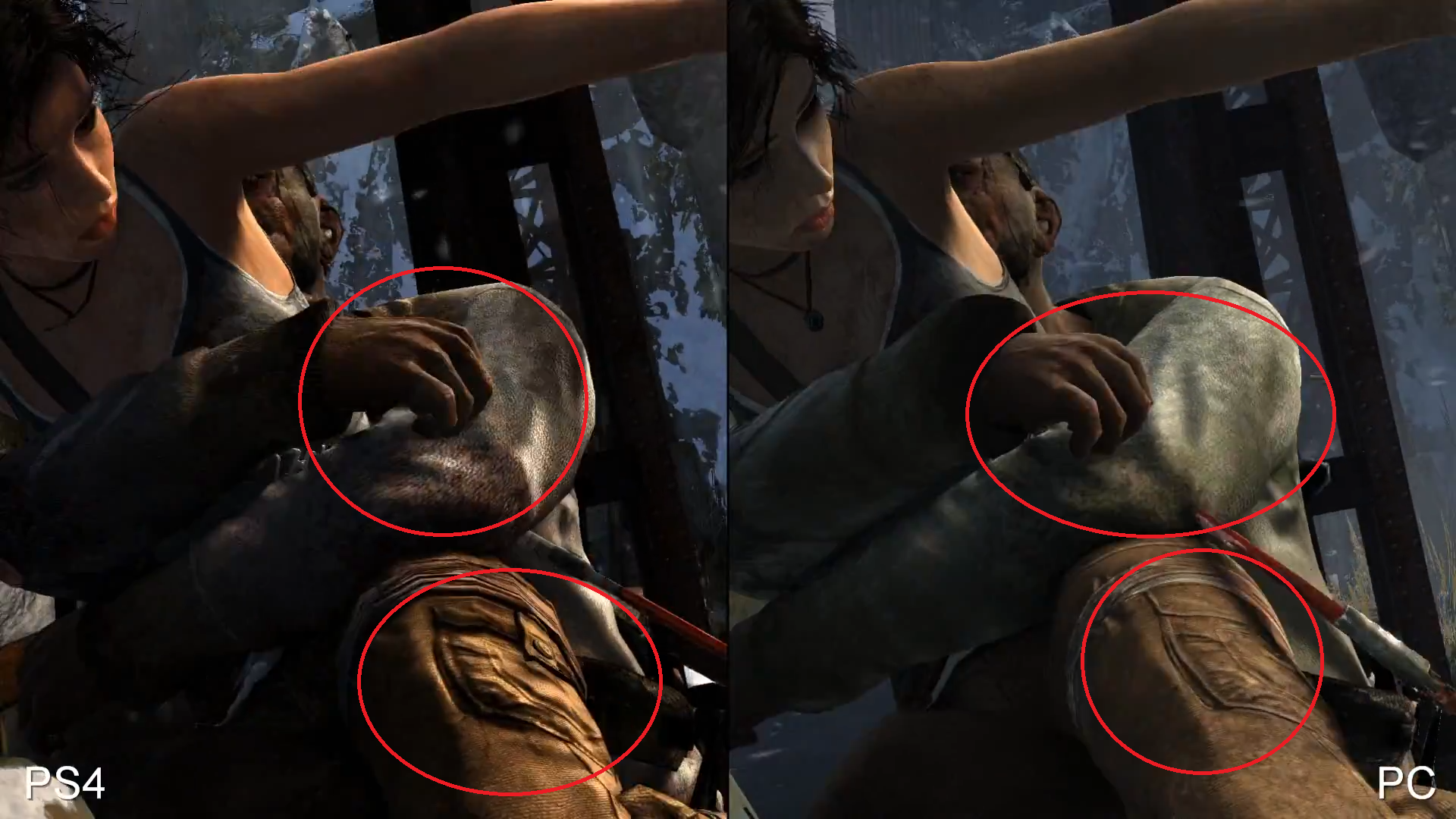

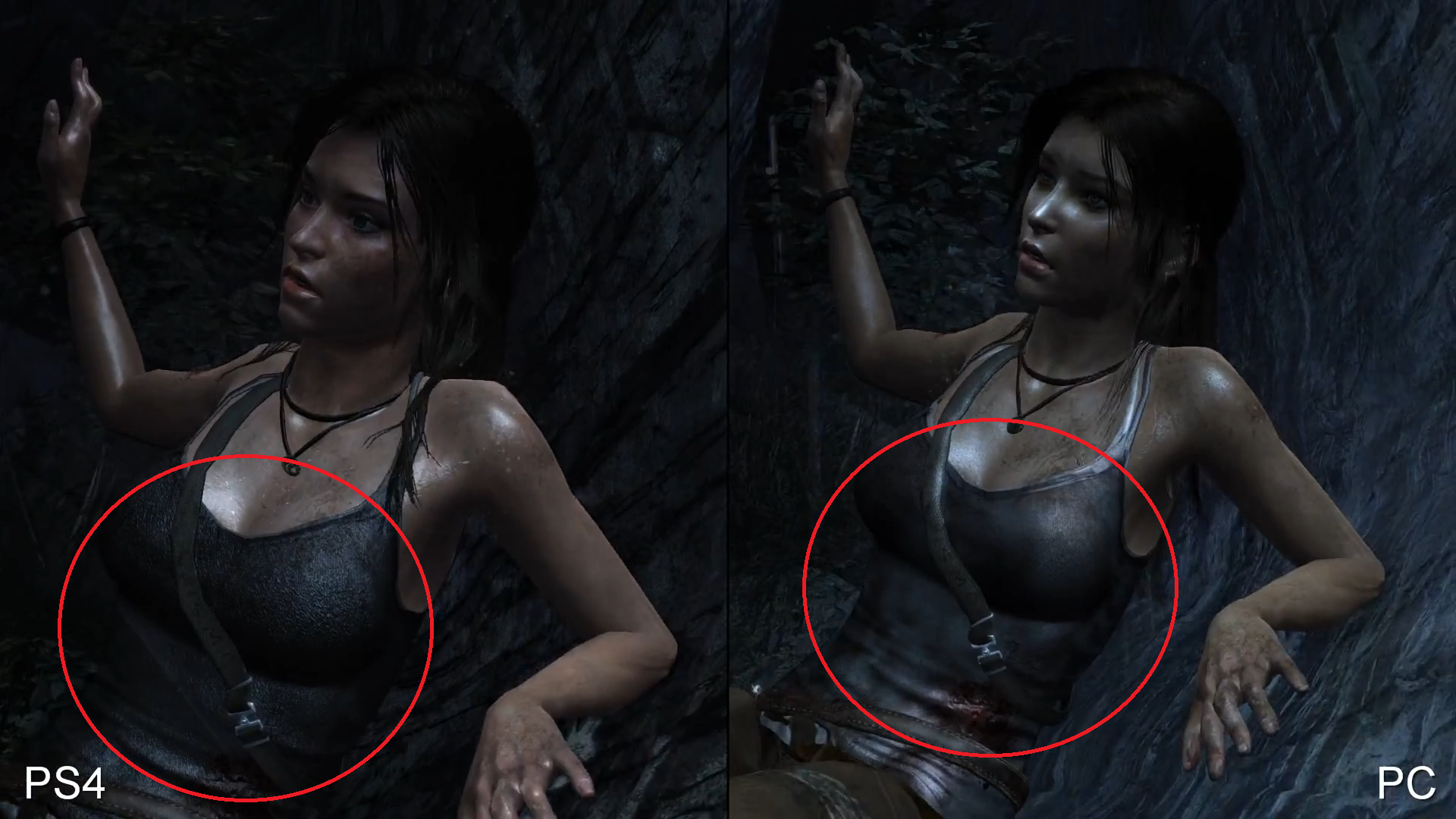

In the high-quality 1080p PlayStation 4 vs Xbox One video available for download from Eurogamer (172.2MB), at 4:55 even the "COVER" HUD text displayed during gameplay is clearly upscaled on XB1 (right), while the PS4 "COVER" hint is native:

At this precise moment Lara is running, so this is 100% confirmed gameplay. The textures, geometry and other assets are also obviously upscaled; it's easily visible on the board right next to Lara.

Here is another HUD text comparison, this time from a normal native XB1 frame. The XB1 text is identical and looks as native as the PS4 text:

It's just about being exact. The game dynamically changes resolution on the XB1 version during gameplay too, and Digital Foundry missed it.

Congrats, you found the needle in the haystack.

I'm still not sure whether that's coming out of a cutscene, though.

The actual clip in the video is literally only 2-3 seconds long and starts right there. Given the on-screen "COVER" command text, my guess is that it's coming out of a cutscene. Maybe it's even a bug.

I'm going to say gameplay is 1080p if DF (and GAF's fine-toothed comb) didn't notice any other instances. DF has a ton of like-for-like comparison shots; does even one gameplay example show a resolution difference?

Labeling this game with the broad stroke of "dynamic resolution" on XBO seems simply untrue for gameplay if that's the only instance.

DF has certainly outed dynamic resolution in games before, so I think they'd have spotted it.

> but you are getting double the amount of temporal information,

Not double: the PS4 gameplay average was 50.98 FPS, not 60. The XBO average could also be significantly higher if it weren't capped (not that it matters, since uncapping it would have caused judder despite the extra "temporal information").
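A quick back-of-the-envelope check of the "not double" point, using the 50.98 FPS PS4 average quoted above and assuming the XBO version is capped at 30 FPS (the cap value is an assumption; the post only says it's capped):

```python
# Assumption: XBO frame rate is capped at 30 FPS (the exact cap isn't stated in the post).
XBO_CAP_FPS = 30.0
# PS4 gameplay average quoted in the thread.
PS4_AVG_FPS = 50.98

def frame_ratio(fps_a: float, fps_b: float) -> float:
    """Return how many frames A delivers per frame of B over the same time span."""
    return fps_a / fps_b

ratio = frame_ratio(PS4_AVG_FPS, XBO_CAP_FPS)
print(f"PS4 delivers {ratio:.2f}x the frames of a 30 FPS cap")  # ~1.70x, not 2x
```

So on average it works out to roughly 1.7x the frames, which is a real advantage but short of double.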

I do wish we still had Lens of Truth or something similar around for a different, even if ham-handed, look at multiplats. It's kind of scary that DF is literally our only source for FPS info, even if they do a great job.