You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

itsmydamnation

Veteran

who said anything about memory channels?

who said anything about memory channels?

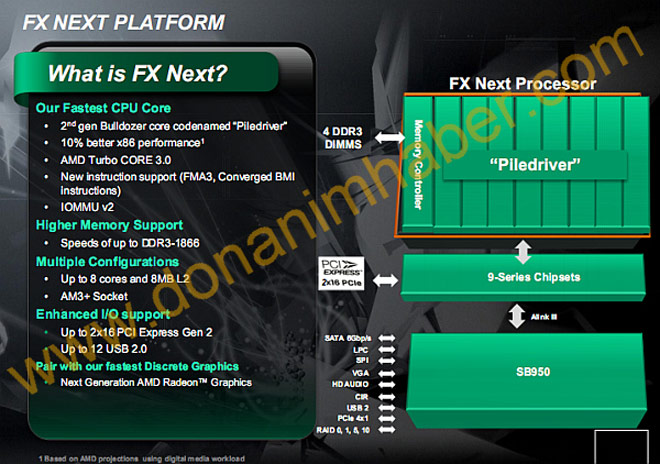

I think this is the source: http://www.cpu-world.com/news_2012/2012011101_Features_of_AMD_Piledriver_processors.html.

But yeah, the slide is ok on the other hand so I'll edit that post.

itsmydamnation

Veteran

I think this is the source: http://www.cpu-world.com/news_2012/2012011101_Features_of_AMD_Piledriver_processors.html.

But yeah, the slide is ok on the other hand so I'll edit that post.

from the updated SOG, it said that the 10core has 4 memory channels, but thats the SOC, the platform could be anything.

The memory controller in models 00h–0fh has two channels to DDR3 memory. The memory

controller in models 10h–1fh has two channels to DDR3 memory. The memory controller in models

20h–2fh has four channels to DDR3 memory.

What..does it mean Zambezi don't even support FMA? WTF

This is very likely a reference to FMA3.

Magnum_Force

Newcomer

I was reading a review on A8-3850 (Llano) yesterday when I came across the die shot of Llano, and I was just wondering something...

In place of the GPU on, you coulf fit 2 rather big cpu cores on the die, then it occurred to me all the current multicore processor are somewhat symetric - all their cores are the same and have the same capabilities as each other.

Would it possible to build an asymetric Processor, for example on Llano lets keep the 4 "STARS" cores, but in place of the GPU, put one powerful cpu core to use up the remaining space.

Granted, adding more STARS cores would be easier and most likely more effecient for multi threaded workloads, but this way you don't sacrifice single threaded performance, and the larger core could be used in a similar way the PPE is used in the Cell.

The llano is what...circa 230mm2 compared to a Phenom 2 x4 @ 250mm2.

Judging by anandtech's CPU benchmarks, their isn't too much in A8-3850 and a similarly clocked Phenom 2 x4 (but they might not tell the whole story).

If this all seems like nonsense them please forgive me, I am rather clueless when it comes to micro engineering

In place of the GPU on, you coulf fit 2 rather big cpu cores on the die, then it occurred to me all the current multicore processor are somewhat symetric - all their cores are the same and have the same capabilities as each other.

Would it possible to build an asymetric Processor, for example on Llano lets keep the 4 "STARS" cores, but in place of the GPU, put one powerful cpu core to use up the remaining space.

Granted, adding more STARS cores would be easier and most likely more effecient for multi threaded workloads, but this way you don't sacrifice single threaded performance, and the larger core could be used in a similar way the PPE is used in the Cell.

The llano is what...circa 230mm2 compared to a Phenom 2 x4 @ 250mm2.

Judging by anandtech's CPU benchmarks, their isn't too much in A8-3850 and a similarly clocked Phenom 2 x4 (but they might not tell the whole story).

If this all seems like nonsense them please forgive me, I am rather clueless when it comes to micro engineering

Or, to expound:

GPU workload is highly parallel work as there are millions of pixels to work on for a single frame of video. You could picture each pixel as a different work unit, all of which can be worked on at approximately the same time. This isn't a pure example and is oversimplified greatly, but nevertheless your GPU is a massive multicore processor.

Using that thought process, if you build a 'huge' GPU that had a super-fast single 'pipe' to process all of those tasks.it would still be a net loss in speed. It is far faster to have 400 individual 'cores' cooperatively process those million pixels than it would be to have one 'core' process them. But that doesn't mean you couldn't build one -- sure, you could. Just don't expect it to perform well, or be power efficient, or cheap.

This highlights the fundamental truth of the matter: there is only so fast a CPU or GPU can process a given request. Electrons flow at approximately the speed of light across the silicon substrate when unimpeded; adding additional distance for them to travel, or adding additional transistors to 'gate' them will result in a net speed loss. This means that any singular stream of instructions and data will always have an upper bound of how fast it can be clocked.

The problem then becomes finding a way to process faster without breaking the limits of physics, which is where companies stopped making single-core processors and starting building multi-core units instead. There may not always be a way to split work into separate individual streams or queues of work; some things must simply happen one-after-another. Intel has continued making forward movement on single-threaded performance, but the answer isn't just throwing more transistors at the problem. Much of Intels' work on IPC has surrounded the logic in the processor figuring out ways to break the serial stream of instructions down at a micro-op level and then handling (scheduling) those more efficiently to use the available resources. This logic can also do speculative work, somewhat 'guessing' at what data might be needed before the instruction actually asks.

To recap: multicore processing is a direct result of the finite limits on how fast a single stream of work can be computed. There is still more room to tweak single-core performance to be sure, but it's a known dead-end and is even now just visible on the horizon. The real work is finding ways to split that thread into smaller parts that can be distributed across multiple cores...

GPU workload is highly parallel work as there are millions of pixels to work on for a single frame of video. You could picture each pixel as a different work unit, all of which can be worked on at approximately the same time. This isn't a pure example and is oversimplified greatly, but nevertheless your GPU is a massive multicore processor.

Using that thought process, if you build a 'huge' GPU that had a super-fast single 'pipe' to process all of those tasks.it would still be a net loss in speed. It is far faster to have 400 individual 'cores' cooperatively process those million pixels than it would be to have one 'core' process them. But that doesn't mean you couldn't build one -- sure, you could. Just don't expect it to perform well, or be power efficient, or cheap.

This highlights the fundamental truth of the matter: there is only so fast a CPU or GPU can process a given request. Electrons flow at approximately the speed of light across the silicon substrate when unimpeded; adding additional distance for them to travel, or adding additional transistors to 'gate' them will result in a net speed loss. This means that any singular stream of instructions and data will always have an upper bound of how fast it can be clocked.

The problem then becomes finding a way to process faster without breaking the limits of physics, which is where companies stopped making single-core processors and starting building multi-core units instead. There may not always be a way to split work into separate individual streams or queues of work; some things must simply happen one-after-another. Intel has continued making forward movement on single-threaded performance, but the answer isn't just throwing more transistors at the problem. Much of Intels' work on IPC has surrounded the logic in the processor figuring out ways to break the serial stream of instructions down at a micro-op level and then handling (scheduling) those more efficiently to use the available resources. This logic can also do speculative work, somewhat 'guessing' at what data might be needed before the instruction actually asks.

To recap: multicore processing is a direct result of the finite limits on how fast a single stream of work can be computed. There is still more room to tweak single-core performance to be sure, but it's a known dead-end and is even now just visible on the horizon. The real work is finding ways to split that thread into smaller parts that can be distributed across multiple cores...

Last edited by a moderator:

Saw that 3 days ago. Surely it is imprecise; Vishera will not have 4 (!) memory channels being a desktop SKU.

Actually, the real question is why "Interlagos" is included as 2-channel memory, when it has 4. It might as well be true that next generation is 2-4 channels just like now.

Now one knows how to build a single CPU core the size of the GPU that's fast enough to be worth building. That's why we have quad core CPUs today instead of a single large core that's 4x as fast running single threaded code.

Why are you mean to the IBM z series? I can understand calling Itanium not worth it:smile:

AMD Reality Check at FXGamExperience: Users in a blind test choose the AMD FX over Intel SNB

Thoughts?

Thoughts?

AMD Reality Check at FXGamExperience: Users in a blind test choose the AMD FX over Intel SNB

Thoughts?

I'm surprised 5 people chose the i3.

Interesting, that the 8150 beat the 2700k, I wouldn't think there would be a lot of difference that could be discerned, although without knowing games and settings it could have been a somewhat stacked test.

They turned a CPU comparison into a GPU comparison, good marketing...Thoughts?