Stagnation in GPU and CPU market (Come AMD, we need you!)

- Thread starter Geeforcer

Nothing, really. Thanks. I just remembered some age-old discussions from way back when regarding whether something was 'properly rotated' by using two corners of an ordered grid when you weren't really able to... err... rotate it. What was the baseline? The whole nomenclature never made much sense to me, as ATI's 4X at the time wasn't really 'rotated' either, was it? More like offset and skewed? Or, as ATI (IIRC) liked people to say: simply 'programmable'. (Was it?)

Sorry for straying off topic.

My only beef with the GeForce 3 was combining AA and AF together, even with x2 AA. Accuview on the GeForce 4 drastically improved x2 AA performance with filtering, which made it much more enjoyable. X4 ordered grid AA on both products sucked: not enough quality for the performance hit. Loathe ordered grid.

Granted, the GeForce 3, 4, and 5 series offered quality angle-invariant filtering; it was still only x8 and the hit was large, but hey, ya can't have everything.

It's nice to see angle-invariant x16 filtering from both IHVs now. nVidia seems to be the leader in filtering right now. Even though filtering is welcome, I can't wait for developers to add AA through the shaders to combat the aliasing that filtering doesn't do a great job on.

Is there really a big lay off?

A bit. But there was still the Ultra that was released around 6 months later. The year's not done yet.

The differences between the 9700 Pro, 9800 Pro, and 9800 XT were not huge over a timeline of around a year and a half, with nVidia not really offering a compelling solution against this architecture.

This is what it feels like to me right now: nVidia's architecture was just so dominant over the competition, with a late product to boot from ATI that wasn't too compelling. Around a year and a half of nVidia dominating and not really destroying the original G80? Possible. This seems to happen with the great products and surprises that hit the market, like the 9700 Pro and the 8800 GTX. The products were so good they're tough to really defeat even after a year.

I can understand how the high-end enthusiasts who did purchase G80s crave more performance for titles like Crysis or DirectX 10. Money to burn and no product really worthy of replacing it, except maybe multi-GPU setups overall.

Silent_Buddha

Legend

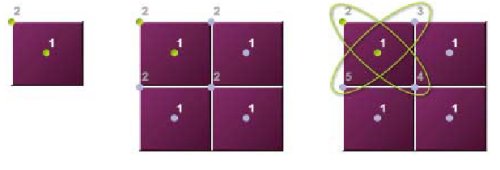

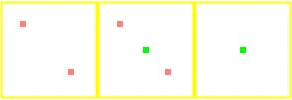

Define 'rotated'. Relative to what?

GF3 sample pattern (2X and QX):

GF4 sample pattern (2X and QX):

R9700 sample pattern (2X):

FX5800 sample pattern (2X):

FX5900 sample pattern (2X):

Source for all images: B3D
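As a rough illustration of what 'rotated' usually means in these discussions, the sketch below compares a textbook 4x ordered grid with a textbook 4x rotated grid inside one pixel. The sample coordinates are illustrative assumptions, not the actual patterns of any card listed above, and the function names are made up for this example. It counts the distinct horizontal and vertical offsets in each pattern and lists the coverage values a vertical edge can produce as it sweeps across the pixel.

```python
# Illustrative sample positions inside a unit pixel (assumed textbook
# patterns, not taken from any specific GPU).
ORDERED_4X = [(0.25, 0.25), (0.75, 0.25), (0.25, 0.75), (0.75, 0.75)]
ROTATED_4X = [(0.375, 0.125), (0.875, 0.375), (0.125, 0.625), (0.625, 0.875)]


def distinct_offsets(samples):
    """Return (number of distinct x offsets, number of distinct y offsets)."""
    xs = {x for x, _ in samples}
    ys = {y for _, y in samples}
    return len(xs), len(ys)


def coverage_levels(samples):
    """Coverage values a vertical edge sweeping left to right can produce."""
    levels = set()
    # Slide a vertical edge across the pixel; everything left of it is covered.
    for step in range(0, 101):
        edge_x = step / 100
        covered = sum(1 for x, _ in samples if x < edge_x)
        levels.add(covered / len(samples))
    return sorted(levels)


if __name__ == "__main__":
    for name, pattern in (("ordered", ORDERED_4X), ("rotated", ROTATED_4X)):
        print(name, distinct_offsets(pattern), coverage_levels(pattern))
```

With these assumed coordinates, the ordered grid only has two distinct offsets per axis, so a near-vertical edge gets fewer intermediate shades than with the rotated layout, where every sample sits in its own column and row.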

So you're saying that RGMS AA is the same as RGSS AA? Again, let's not let those facts get in the way, eh?

While RGMS AA does a wonderful job for its relatively small performance hit compared to any SS AA implementation, it's still a far cry from the quality of RGSS AA. The nice thing about RGSS AA was that AF generally wasn't needed (again, IMO). The proof in my eyes at the time was that the GeForce 3 and GeForce 4 with 4xAA and the max AF you could choose still didn't do as good a job with shimmering ground textures and crawling polygon edges.
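The gap between multisampling and supersampling described here can be sketched in a few lines. This is a toy model under stated assumptions: `texture` is a hypothetical high-frequency stripe pattern, the sample offsets are illustrative rather than any real hardware pattern, and the pixel is assumed to sit fully inside one polygon. Supersampling evaluates the texture at every sample, while multisampling evaluates it once at the pixel centre and reuses the result, so only supersampling averages out texture detail inside the pixel.

```python
import math

# Illustrative 4-sample pattern inside a unit pixel (an assumption, not a
# real hardware pattern).
SAMPLES = [(0.375, 0.125), (0.875, 0.375), (0.125, 0.625), (0.625, 0.875)]


def texture(u, v):
    """Hypothetical high-frequency texture: vertical stripes, two per pixel."""
    return 1.0 if math.floor(u * 4) % 2 == 0 else 0.0


def supersample(px, py):
    """Shade every sample separately, then average (SSAA-style)."""
    shades = [texture(px + sx, py + sy) for sx, sy in SAMPLES]
    return sum(shades) / len(shades)


def multisample(px, py):
    """Shade once at the pixel centre; every covered sample reuses that value
    (MSAA-style, for a pixel fully inside one polygon)."""
    shade = texture(px + 0.5, py + 0.5)
    return sum(shade for _ in SAMPLES) / len(SAMPLES)


if __name__ == "__main__":
    print("supersampled:", supersample(0, 0))
    print("multisampled:", multisample(0, 0))
```

In this toy case the stripes are narrower than a pixel, so the single-sample result flips between black and white as the surface moves, while the supersampled result settles on a grey. Real hardware also applies mip-mapping and AF, which this sketch leaves out.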

And if it was limited to 2xRGMS AA while it used OGSS for higher AA samples, that still wouldn't invalidate my argument with regards to overall IQ.

And for the record, I thought AF on the Radeon 9700 pro was worse also. However, compared to Nvidia at the time, it was generally much more useable when combined with 4xAA. But overall I still felt that 4xAA + 16xAF on the R9700 pro was worse than just 4xRGSS AA on the V5 5500. Speed on the other hand was orders of magnitude higher by that point in time.

Regards,

SB

And if it was limited to 2xRGMS AA while it used OGSS for higher AA samples, that still wouldn't invalidate my argument with regards to overall IQ.

Yes, it does, because the GF3 had the processing power to run games at much higher resolutions. I went from a V5 to a GF3 in late 2001, and titles like DAoC looked much, much better at 1600x1200 with 2x AA and anisotropic filtering than anything the V5 could output. You're simply focusing your argument solely on texture shimmering as the end-all, be-all of image quality because it's the only aspect of the V5's output that's even remotely defensible when comparing against a GF3. And to do so you're ignoring the fact that there was slight texture blurring from the super-sampling, and that you were staring at MIP map lines all the time, with noticeably blurry textures once you crossed that first line.

I was a huge 3dfx fan and I even wrote for their 3dfxgamers.com site, but it's 2007 now and technology has progressed. Let it go.

So you're saying that RGMS AA is the same as RGSS AA? Again, let's not let those facts get in the way, eh?

Sigh..

Geeforcer said: GF3 and GF4 had 2x rotated grid AA (RGMS), which provided largely identical edge aliasing reduction to the 2x RGSS of the V5, while AF did a better job of clearing up the textures than 2x SS + bilinear filtering ever could.

What is the point of this argument again? Voodoo 5 is better than GF3? Maybe it is for retro gaming, where you can run Glide games with AA.

But if you want to play a game that uses T&L heavily or uses shaders, you aren't going to want a Voodoo 5. Just from a simple performance standpoint, Voodoo 5 can not compete at all. From a quality and feature standpoint, other than its interesting (but slow) AA capability, it's missing bunches of features.

It sure was a disappointment to see how awful the 2D output remained on GF3 though. I skipped every GeForce from 2-5 because of how blurry the earlier cards were. I went through the Radeons, from the original to the 9700. They all had excellent 2D. I finally got my first GF card in years with my laptop and its 6800 Go, back in '04. And 6800 has its own problems when it comes to texture filtering (compared to 9700 and X800).

Voodoo5, on the other hand, while lacking a zillion 3D features because of the company's inability to make progress, had fantastic 2D quality. I picked one up a couple of years ago for a Win98 rig. However, I've found that a GeForce FX 5600 I have can be a better choice, with its T&L/DX8, AF and AA support in combination with a Glide wrapper or OpenGL renderer replacement (UT). NV's OpenGL support is also better than 3dfx's ever was.

I still wanna know where people are buying these bad GF-based cards with crappy 2D quality. I've owned at least 1 GF card from each line made and none of them ever had crappy 2D quality. Text was always crisp, clean and clear for me on my 17" and later on 21" monitors at 1600x1200x32bit resolutions.

Everything is subjective.

High resolutions like 1600 x 1200 in 32-bit colour with x2 RGMS AA and x2 trilinear AF looked better (to me) overall in most cases than 1024 x 768 in 22-bit colour with x2 RGSS. These would have been usable, enjoyable settings on the GeForce 3 and Voodoo 5 respectively in many titles.

However, if it was apples-to-apples IQ at 1600 x 1200, x2 RGSS compared to x2 RGMS AA with x2 trilinear AF: RGSS all day long. But performance needs to be part of the equation.
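To put rough numbers on the performance side of that trade-off, here is a back-of-the-envelope sketch. It assumes the simplest possible cost model: one shaded fragment per pixel for multisampling, one per sample for supersampling, and no overdraw, bandwidth, or fill-rate effects. The `frame_cost` helper is hypothetical and only counts work per frame.

```python
def frame_cost(width, height, samples, supersampled):
    """Return (shaded fragments, stored samples) per frame, ignoring overdraw."""
    pixels = width * height
    stored = pixels * samples
    # Supersampling shades every sample; multisampling shades once per pixel.
    shaded = stored if supersampled else pixels
    return shaded, stored


# The two settings compared above: 2x multisampling at high resolution
# versus 2x supersampling at a lower one.
high_res_ms = frame_cost(1600, 1200, 2, supersampled=False)
low_res_ss = frame_cost(1024, 768, 2, supersampled=True)
same_res_ss = frame_cost(1600, 1200, 2, supersampled=True)

if __name__ == "__main__":
    print("1600x1200 2x MS:", high_res_ms)
    print("1024x768  2x SS:", low_res_ss)
    print("1600x1200 2x SS:", same_res_ss)
```

Under this simplified model, 2x supersampling at 1600 x 1200 shades twice as many fragments as 2x multisampling at the same resolution, which is one way to see why the apples-to-apples setting was not practical on the same hardware.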

On anisotropy with the 9700?

It offered much more quality than the 8500, though, which was bilinear with some messy mip-map noise at times depending on the texture. (This was helped a lot by adding a x2 SSAA setting that cleaned up virtually all of this noise, except in the worst-case examples.)

Even though the 9700's filtering didn't offer the quality of nVidia's quality settings, ATI's trilinear AF this time was fast, with most of the mip-map noise gone. The combination of the quality AA patterns and the ability to raise the resolution (thanks to more performance) offered superior IQ overall.

Apples-to-apples IQ comparisons are always welcome, but then there is obviously the combination factor: resolution/AA/AF/performance.

I still feel quality SSAA rocks, though, and wish it was still offered today considering how powerful these cards are. It could still be enjoyed by gamers who have a library of titles and aren't only playing the latest and greatest like Crysis. There are thousands of gaming titles, and performance would be great for many of them. I understand why it's not offered, but hey, it's my view.

Voodoo5, on the other hand, while lacking a zillion 3D features because of the company's inability to make progress, had fantastic 2D quality.

It certainly failed and couldn't compete in hardware T&L benches at the time, but to me it started the ball rolling on the cinematic feel for 3D. The ability to help clean up a moving screen and make the game look more real simply was the future for many gamers. Gamers would be talking about FSAA; they just didn't know it yet.

Also, talk of motion blur, soft reflections and shadows, and depth of field in games was just dismissed as cinematic effects back then, but now titles are truly adding these ideas to make our games feel more cinematic.

The Voodoo5 was the worst-marketed card in the history of 3D, but damn it, it was a nice gaming card.

MulciberXP

Regular

I still wanna know where people are buying these bad GF-based cards with crappy 2D quality. I've owned at least 1 GF card from each line made and none of them ever had crappy 2D quality. Text was always crisp, clean and clear for me on my 17" and later on 21" monitors at 1600x1200x32bit resolutions.

It's in our collective imagination and in the imagination of reviewers across the world. If you say how great yours was over and over, maybe we'll all realize that they actually DID have good 2d image quality.

The majority of AIBs put shitty filters on GF1-3 cards; that's where the general consensus that they had crappy 2D comes from (high-quality ones were used on higher-priced cards, though).

With the GF4, nvidia had a reference implementation that everyone used, and it was much, much improved.

I have a GF2 with excellent 2d - made by Gainward. Still runs, AFAIK.

Silent_Buddha

Legend

Actually, my recollection was that prior to the GeForce 4, all nvidia cards had a really low-quality, low-frequency RAMDAC, including the reference card.

What I can't remember however, was if the RAMDAC was integrated onto the video processor or not? And I'm too lazy to look it up right now.

The quality and speed of the RAMDAC was greatly increased with the Geforce 4 and beyond, however quality still wasn't on par with ATI and Matrox in that area, but was more than good enough for me considering the speed of the card compared to its competition.

IQ still left a lot to be desired though, IMO. However, at this point, as Sir Pauly stated above, there was no way even at low resolutions for the V5 to compete at anything resembling playable speeds on new titles. Ergo, why I had a second machine with the V5 dedicated to playing older or non-demanding titles.

Still, I never did stop comparing the V5 side by side with all cards I bought to see if overall scene IQ improved, in titles that would actually run no matter how slowly.

I gave that up about 6 months into my purchase of a 9700pro however as it was becoming more of a hassle trying to get the thing to run on newer games.

Regards,

SB

Nah, they had a fully integrated 350 MHz RAMDAC in GeForce 2. That's just shy of the 360 MHz RAMDAC in a G400 MAX. Even Riva 128 has an integrated RAMDAC. You'll find a separate RAMDAC for the second display on lots of these GeForce cards, though, because the chips only have a single RAMDAC.

http://www.digit-life.com/articles/sumageforce2gts/index.html
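To put the 350 MHz figure in context, the sketch below estimates the pixel clock a display mode needs. It assumes blanking-inclusive totals of 2160 x 1250 for a 1600x1200 mode, which are the totals commonly cited for standard timings at that resolution; treat them, and the helper names, as assumptions for illustration only.

```python
def pixel_clock_mhz(h_total, v_total, refresh_hz):
    """Pixel clock in MHz for a mode, given totals that include blanking."""
    return h_total * v_total * refresh_hz / 1e6


def max_refresh_hz(h_total, v_total, ramdac_mhz):
    """Highest refresh rate a RAMDAC of the given speed could drive."""
    return ramdac_mhz * 1e6 / (h_total * v_total)


# Assumed blanking-inclusive totals for a 1600x1200 mode.
H_TOTAL, V_TOTAL = 2160, 1250

needed = pixel_clock_mhz(H_TOTAL, V_TOTAL, 85)
ceiling = max_refresh_hz(H_TOTAL, V_TOTAL, 350)

if __name__ == "__main__":
    print(f"1600x1200 @ 85 Hz needs about {needed:.1f} MHz")
    print(f"A 350 MHz RAMDAC tops out near {ceiling:.0f} Hz at those totals")
```

Under these assumptions a 350 MHz RAMDAC has plenty of headroom for 1600x1200 at 85 Hz. The clock rating only sets a ceiling on resolution and refresh rate; it says nothing about how clean the analog signal is once it leaves the chip.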

The quality problems simply came down to cheap board components.

http://www.maxuk.net/241mp/geforce-image-quality.html

As swaaye was saying, Nvidia's 2D quality woes were due to the fact that they would not tighten up component specs, thus giving their partners leeway to use components as good or as bad as they wished, while everyone else was making their own cards at that time (Matrox, 3dfx, ATI). I had two GF3s: an MSI, which had very bad 2D and consequently went back, and a Visiontek, which was crisp and clear.

Silent_Buddha

Legend

Ah, thanks for clearing that up. I remembered it was "something" that was low quality/low speed, but over time my memory just stuck RAMDAC into that slot.

Regards,

SB
