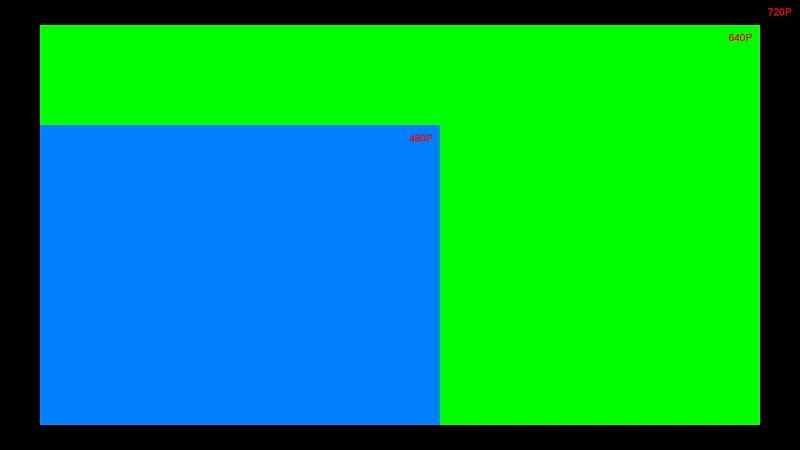

I think a lot of people are confused and believe there's a bigger difference between 640p and 720p than there really is. 640p is still much, much better than SD. Just to illustrate:

Before I resized:

http://img.photobucket.com/albums/v333/hardknock/DifRes.jpg

Since it's being upscaled to 720p, I'd reckon nobody would have been able to tell the difference between it and a native 720p image, given Halo's art direction (the textures aren't very detailed).
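To put rough numbers on the gap, here is a small sketch that compares pixel counts and the stretch needed to upscale to 720p. The frame sizes are assumptions for illustration: "640p" is taken as 1152x640 and SD as 640x480, while 1280x720 is the standard 720p frame.

```python
# Assumed frame sizes for illustration: 1152x640 for "640p" and 640x480
# for SD are hypothetical choices; 1280x720 is the standard 720p frame.
RESOLUTIONS = {
    "SD": (640, 480),
    "640p": (1152, 640),
    "720p": (1280, 720),
}


def pixel_count(name: str) -> int:
    """Total pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def upscale_factors(src: str, dst: str) -> tuple[float, float]:
    """Horizontal and vertical stretch needed to scale src up to dst."""
    src_w, src_h = RESOLUTIONS[src]
    dst_w, dst_h = RESOLUTIONS[dst]
    return dst_w / src_w, dst_h / src_h


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_count(name):,} pixels")
    print(f"640p has {pixel_count('640p') / pixel_count('720p'):.0%} of 720p's pixels")
    print(f"640p has {pixel_count('640p') / pixel_count('SD'):.1f}x SD's pixels")
    sx, sy = upscale_factors("640p", "720p")
    print(f"Upscale 640p -> 720p: {sx:.3f}x wide, {sy:.3f}x tall")
```

With these assumed sizes, 640p works out to 80% of 720p's pixel count and 2.4 times SD's, and the upscale only stretches each axis by roughly 11 to 13 percent.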

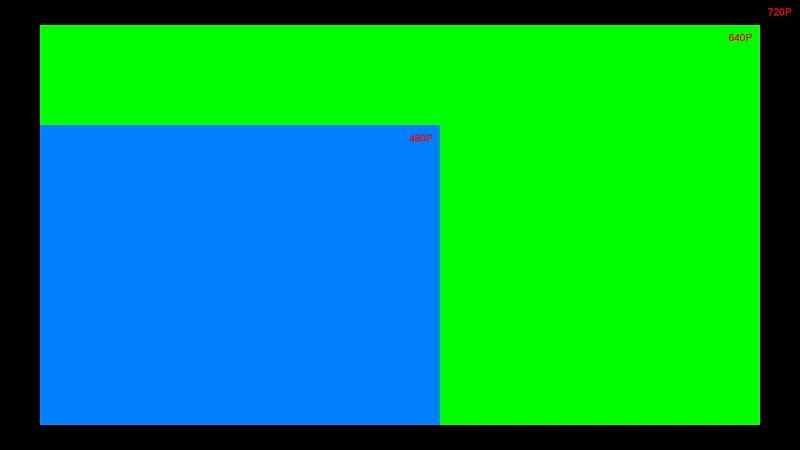
