btw.. hasn't anyone noticed that, according to the presentation, they are actually doing supersampling in the lighting pass? That's not going to give any particular advantage, as the subsamples will mostly have the same values per pixel, but if they implement a more clever scheme for the lighting pass they will likely see big speed improvements.

Killzone 2 technology discussion thread (renamed)

- Thread starter Terarrim

- Start date

- Status

- Not open for further replies.

Quote: btw..hasn't anyone noticed that according the presentation they are actually doing supersampling in the lighting pass? not going to give any particular advantage as sub samples will mostly have the same values per pixel but if they implement a more clever scheme for the lighting pass they will likely see big speed improvements.

Maybe they have an edge on almost every pixel, so it was not worth it - that would be in line with that 1 mil triangles. Joking, of course...

Hm, you are right - 2xMSAA does indeed make a difference here. But I still wonder how it compares (bandwidth-wise) to bilinear or anisotropic filtering on all texture reads for each pixel and pass in a forward renderer. I have to calculate that.

I wonder if the bandwidth is why it's not 60fps.

Also consider that they have to fill the G-buffer for each frame. It's a quite large buffer, already two and a half times as big as a simple forward renderer with single pass lighting... and then a forward rendering pass...

Then again, it seems they've only dropped specular color and reflections, which would only be important for some materials (metals work better with it, for example), and apart from the 2x AA they can also implement an edge blur filter based on the depth/normals pass, which would smooth out the image a bit more (a common trick in offline CG compositing, too). Or they might already do this, considering the low amount of aliasing in the screenshots.

And also, they've made a very clever artistic decision with the post processing pass, which can greatly enhance the results and give it more of a stylized, offline CG look. I'd even risk saying that id added their post stuff to Rage after seeing this - they've mentioned that it was a recent and overnight development.

I'd run a full-screen pass that reads all the subsamples of one particular G-buffer parameter (say albedo) and generates a stencil mask that can be used later to early-reject all those pixels that don't have the same subsamples. That way you could shade every light twice: one pass processes only one subsample, the other one works on both subsamples. (The same mask could be reused for deferred shadowing as well, cutting shadowing times.) ...hit Submit instead of Preview...

nAo - how would you do this? Shader branching or somewhere outside the shader?

The presentation claims that they share shadow taps between samples as an optimization, to get performance comparable with the non-MSAA case.

A shader that generates such a mask would be something like this:

Code:

// Writes the mask only where both subsamples of a pixel match;
// pixels whose subsamples differ (edges) are discarded.
half4 generateStencilEdgesMask( uniform sampler2D multisampledBuffer, float2 uvLeft : TEXCOORD0, float2 uvRight : TEXCOORD1) : COLOR
{
    half3 leftSample = tex2D( multisampledBuffer, uvLeft).rgb;
    half3 rightSample = tex2D( multisampledBuffer, uvRight).rgb;
    // a vector != compares per component, so reduce the result with any()
    if ( any( leftSample != rightSample))
        discard;
    return half4(0.0f, 0.0f, 0.0f, 0.0f);
}

Obviously this stuff is not meant to work for real, it's purely fictional, but it should give a rough idea of what I'm talking about.

EDIT: just realized that this stuff only works if current multisampling implementations supersample depth AND stencil, and not just depth. Unfortunately I don't know if they do that, though it would make a lot of sense.
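To put a rough number on the two-pass idea above, here is a minimal CPU-side sketch in Python. It is only a toy model, not anything taken from the presentation: the function names `build_edge_mask` and `lighting_cost` and the sample pixel values are made up for illustration, and it assumes 2x MSAA with one albedo value per subsample.

```python
# Hypothetical CPU-side model of the masked two-pass lighting idea.
# Every name and value here is illustrative, not from the presentation.

def build_edge_mask(pixels):
    """Return True for pixels whose two subsamples differ (edge pixels)."""
    return [left != right for (left, right) in pixels]

def lighting_cost(mask, num_lights):
    """Count per-light shading evaluations with and without the mask."""
    edge = sum(mask)
    interior = len(mask) - edge
    # Plain supersampling: shade both subsamples of every pixel.
    supersampled = 2 * len(mask) * num_lights
    # Masked scheme: shade once per interior pixel, twice per edge pixel.
    masked = (interior + 2 * edge) * num_lights
    return supersampled, masked

# Four pixels, each with two albedo subsamples; only the third is an edge.
pixels = [
    ((200, 180, 160), (200, 180, 160)),
    ((200, 180, 160), (200, 180, 160)),
    ((200, 180, 160), (40, 40, 60)),
    ((40, 40, 60), (40, 40, 60)),
]

mask = build_edge_mask(pixels)
full, reduced = lighting_cost(mask, num_lights=3)
print(mask, full, reduced)
```

The fewer edge pixels there are, the closer the masked cost gets to half of the supersampled cost, which is where the speed improvement would come from.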

Quote: And also, they've made a very clever artistic decision with the post processing pass, which can greatly enhance the results and give it more of a stylized, offline CG-look. I'd even risk that id added their post stuff to Rage after seeing this - they've mentioned that it was a recent and overnight development

I'd say that their post processing stuff is the most innovative part of their work.. too bad they didn't talk much about it.

"Nerve-Damage"

Regular

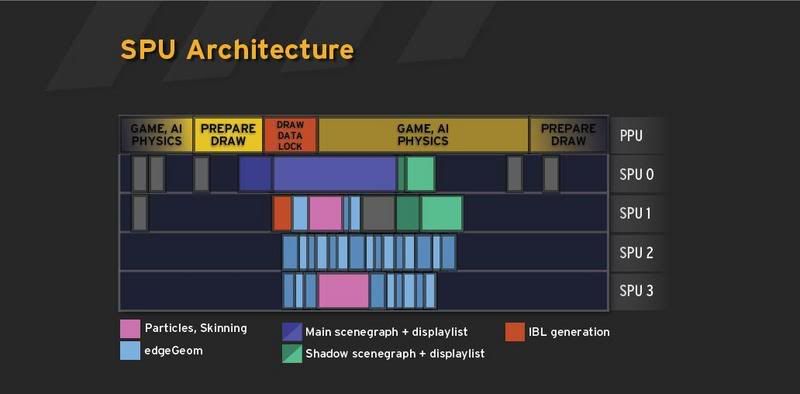

So all physics on the PPU, and two SPUs not in use? Do the blanks mean that the SPUs idle a lot?

Same thing that I was thinking. Lots of idle time indeed...

* SPU 4???

* SPU 5???

* SPU 6 (OS)

Seems like presentations from Develop were posted online while I was on a vacation. Good to have something to read during the evenings.

http://www.develop-conference.com/d.../vwsection/Deferred Rendering in Killzone.pdf

Their G-Buffer are 5 INT8 textures? Or am I in the wrong movie?

Laa-Yosh said: Also consider that they have to fill the G-buffer for each frame.

Thanks, this is what I was missing completely in my bandwidth calculations!

Quote: So all physics on the PPU, and two SPU not in use? Does the blanks mean that the SPUs idles alot?

As far as I remember the talk, he said that game, AI and physics all run a number of their own SPU tasks, and the gray boxes are supposedly these tasks. He did not focus on this part at all (as it is not part of rendering), so I suppose it's there just to give a rough idea that there is SPU activity before the PPU hits the rendering part. I would say physics of that scale has to be on the SPUs.

The flow of that part of the presentation was roughly:

"PPU orchestrates game logic, AI and physics (with their own SPU tasks), then there is time to prepare draw data that changed (again with some SPU tasks). The display list generator is launched as soon as possible on an SPU and it launches its own sub-tasks that do skinning, edge geom... then there is shadow map rendering in parallel. And in the meantime the PPU moves on to update logic for the next frame (the red lock thing + next bars on the PPU side)..."

As I remember it, all of the "colored" SPU bars belong to the rendering of one frame (so not to the PPU stuff after, and including, the "data lock" bar) - so rendering leaves the PPU at a single point during the "Prepare Draw" bar, and the PPU can do general stuff for the next frame.

He did not mention anything about the picture actually showing real load, and to me it just looks like a very rough high-level overview for the masses.

Fran was there as well, so he might fill in what I missed.

nAo said: I'd run a full screen pass that read all the subsamples...

Thanks nAo. That sounds like a very practical and logical solution. I wonder - maybe they were doing it already but traded it for the early stencil culling used for light volumes and the "sun" (considering how they rely on early stencil culling in the light pass).

nAo said: EDIT: just realized that this stuff only works if current multisampling implementations supersample depth AND stencil, and not just depth. Unfortunately I dont' know if they do that, though it would make a lot of sense

I would say both are properly per-sample on edges (depth and stencil are stored as 32 bits in the end).

Quote: Same thing that I was thinking. Lots of idle time indeed...

* SPU 4???

* SPU 5???

* SPU 6 (OS)

Obviously they've only talked about rendering related stuff, the rest of the SPU processing time is dedicated to game code, animation blending, AI and such...

HumbleGuy: Thanks for clearing some things up, I would guess that either they have lots of room for improvement or don't talk about the entire engine in that slide, where the latter is the most logical.

Quote: Still a lot of features planned

‣ Ambient occlusion / contact shadows

‣ Shadows on transparent geometry

‣ More efficient anti-aliasing

‣ Dynamic radiosity

The todo-list sounds like some pretty work-heavy stuff for the engine.

Are there any games out now that have "contact shadows"? It should improve the sense of objects belonging to the world, I'd reckon. Characters really touching the surfaces and such?

Quote: I'd would say that their post processing stuff is the most innovative part of their work..too bad they didn't talk much about it

To me it seems that it's the result of Guerrilla taking a long, thorough look at the compositing pipeline at Axis Animation, and at what they've done to their raw renders for the E3 2005 trailer. Color correction can go a long way in the hands of some good artists, and it helps to make the look of separately textured assets more consistent. You can also use it to simulate fog and atmosphere.

I can check out what our compositors are doing in more detail on Monday if you're interested.

Quote: HumbleGuy: Thanks for clearing some things up, I would guess that either they have lots of room for improvement or don't talk about the entire engine in that slide, where the latter is the most logical. Are there any games out now that have "contact shadows". It should improve object belonging to the world i'd reckon. Characters really touching the surfaces and such? The todo-list sounds like some pretty workheavy stuff for the engine.

There are games out there that do 'contact shadows'.

Quote: Their G-Buffer are 5 INT8 textures? Or am I in the wrong movie?

Yep, one 32-bit z-buffer and four RGBA8 color buffers, times 2, as they have 2x multisampling.

That's 40 bytes per pixel -> 36 megs at 720p resolution.
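For anyone who wants to check the arithmetic, here is a small Python sketch of the same calculation. The G-buffer layout is the one described above; the forward-renderer layout used for comparison (one RGBA8 color buffer plus a 32-bit depth buffer, also at 2x MSAA) is my own assumption, as is taking 720p to mean 1280x720.

```python
# G-buffer size from the layout described in the thread:
# one 32-bit z-buffer + four RGBA8 color buffers, with 2x multisampling.
BYTES_PER_TARGET = 4      # 32 bits per sample for each buffer
GBUFFER_TARGETS = 5       # 1 depth + 4 color
MSAA_SAMPLES = 2
WIDTH, HEIGHT = 1280, 720 # assumed meaning of "720p"

gbuffer_bytes_per_pixel = BYTES_PER_TARGET * GBUFFER_TARGETS * MSAA_SAMPLES
gbuffer_bytes = gbuffer_bytes_per_pixel * WIDTH * HEIGHT

# Assumed forward-renderer baseline: 1 depth + 1 color buffer, same MSAA.
FORWARD_TARGETS = 2
forward_bytes_per_pixel = BYTES_PER_TARGET * FORWARD_TARGETS * MSAA_SAMPLES
ratio = gbuffer_bytes_per_pixel / forward_bytes_per_pixel

print(gbuffer_bytes_per_pixel)   # 40
print(gbuffer_bytes)             # 36864000
print(gbuffer_bytes / 2**20)     # 35.15625
print(ratio)                     # 2.5
```

So "36 megs" holds in decimal megabytes (about 36.9 MB, or roughly 35.2 MiB), and under the assumed baseline the ratio matches the "two and a half times" figure mentioned earlier in the thread.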

Quote: Obviously they've only talked about rendering related stuff, the rest of the SPU processing time is dedicated to game code, animation blending, AI and such...

Note from the SPU usage slide that they use EDGE to cut the number of triangles pushed to RSX.

Quote: Obviously they've only talked about rendering related stuff, the rest of the SPU processing time is dedicated to game code, animation blending, AI and such...

The PDF specifically mentions Game AI Physics within the PPU and 4 SPE's.

Quote: yep, one 32bit z buffer and four RGBA8 color buffers, times 2, as they have 2x multisampling. That's 40 bytes per pixel -> 36 megs at 720p resolution

HOLY COW !!! what does it all mean though?

Quote: Thanks nAo. That sounds like very practical and logical solution. I wonder - maybe they were doing it already but traded it for early stencil culling used for light volume and "sun" (considering how they rely on early stencil culling in light pass).

I think they just went for the most straightforward solution. The game will be out next year, and they still have time to implement more clever AA schemes. The nice thing is how flexible last year's console can be compared to even more modern DX10 hw.

Quote: HOLY COW !!! what does it all mean though?

It means that a deferred renderer consumes more frame buffer memory than a common forward renderer.
