There are a lot of weird behaviors in this demo that are still unaccounted for. The game reads through 65GB in a few minutes while standing still, for example.

I thought it might be loading and evicting data in that scene, so I set up a RAM cache to see how large that data set was. But I had to stop at 24GB because it seems to read through the entire 40GB of data files over and over.

I have also seen this behaviour in the final game: just sitting still, I see a constant 500 MB/s on the NVMe for no apparent reason. This happens for minutes on end. This screenshot was taken after I had sat still here for about 4 minutes, and it was doing 500-530 MB/s the entire time.
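For a sense of scale, here is a quick back-of-the-envelope sketch of what those numbers add up to. It only uses the figures above (500-530 MB/s, about 4 minutes, 40GB of data files); the decimal units (1 GB = 1000 MB) and the function name are my own assumptions.

```python
# Rough arithmetic only. Rates, duration and data size come from the post;
# decimal units (1 GB = 1000 MB) are an assumption.

def total_read_gb(rate_mb_s: float, seconds: float) -> float:
    """Total data read, in GB, at a constant rate for the given time."""
    return rate_mb_s * seconds / 1000.0

DATA_FILES_GB = 40       # size of the game's data files, as stated above
IDLE_SECONDS = 4 * 60    # roughly 4 minutes of standing still

for rate in (500, 530):
    read_gb = total_read_gb(rate, IDLE_SECONDS)
    passes = read_gb / DATA_FILES_GB
    print(f"{rate} MB/s for 4 min: {read_gb:.1f} GB, about {passes:.1f}x the data files")
```

If the rate really is constant, that works out to roughly 120-127 GB in 4 minutes, or about three full passes over the 40GB of data files.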

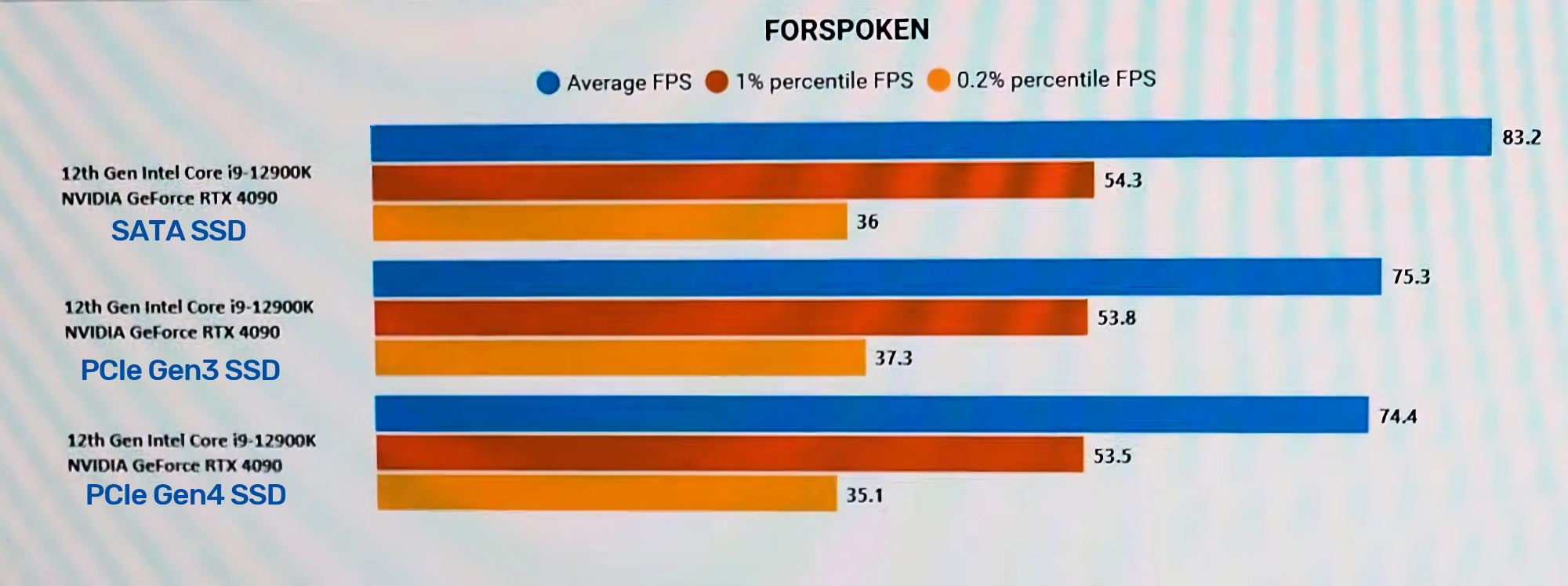

Which makes this seem all the stranger:

Why would a SATA drive have a higher FPS (implying the NVMe is causing a higher decompression load due to its greater throughput) if the streaming load is within a SATA drive's transfer limit of 550MB/s? Or does the streaming load increase when moving through the world?
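To put the question in numbers, this small sketch compares the idle read rates I observed with the 550MB/s SATA limit mentioned above. It assumes the reported rate is a steady average; if the reads are bursty, the short-term peaks could be well above it, which this does not capture. The helper name is just for illustration.

```python
# Compares observed idle read rates with the SATA limit quoted in the post.
# Assumes the observed figures are steady averages, not short bursts.

SATA_LIMIT_MB_S = 550  # SATA transfer limit as quoted above

def utilization(observed_mb_s: float, limit_mb_s: float = SATA_LIMIT_MB_S) -> float:
    """Fraction of the drive's transfer limit used by the observed rate."""
    return observed_mb_s / limit_mb_s

for rate in (500, 530):
    print(f"{rate} MB/s is {utilization(rate):.0%} of the SATA limit")
```

So the idle load would fit on a SATA drive, but only just: it would be running at around 91-96% of its limit the whole time.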