DegustatoR

Veteran

Yes, they did, and it didn't help Vega become a better gaming GPU, because despite the higher clock, the limitations due to architectural design choices still remained, which actually proves my point that RDNA3 clock is not limited by the process node :-) RDNA2 clocks up to 2.8GHz on 7nm; I don't see any valid point from you as to why it should be worse on a 5nm node, besides some architectural glitches or design flaws...

Who says that it's worse?

What do we know about wave size for compute shaders? Some people assume it's 32, others 64.

Afaik the compiler decides arbitrarily in a black box, but I'm not sure which shader stages this applies to.

64 for VS/GS/PS as indicated by Mesa RADV

Controllable via VK_EXT_subgroup_size_control apparently.

OMG, are you just suggesting another soon-to-be-released part with more clock/more WGPs? Or some kind of 295X2-style multicore solution?

No.

The reality is a lot funnier than that.

Let's call it the 7970XTX 3GHz Edition and throw nostalgiabuxx at the screen.

RX-816 – Based on AMD internal analysis November 2022, on a system configured with a Radeon RX 7900 XTX GPU, driver 31.0.14000.24040, AMD Ryzen 9 5900X CPU, 32GB DDR4-7200MHz, ROG CROSSHAIR VIII HERO (WI-FI) motherboard, set to 300W TBP, on Win10 Pro, versus a similarly configured test system with a 300W Radeon 6900 XT GPU and driver 31.0.12019.16007, measuring FPS performance in select titles. Performance per watt calculated using the total board power (TBP) of the AMD GPUs listed herein. System manufacturers may vary configurations, yielding different results.

The annotated GCD die shot (provided by AMD tho) doesn't show any large interface for GCD-GCD communication.

Consider that at best he's the AMD version of eastman, at worst just an attention seeker making things up on the spot.

I find the lack of ray tracing specific performance boosts weird considering AMD talks about a 50% increase in performance per CU and the 7900 XTX has 20% more CUs. So at least in some titles we should be seeing an 80% increase in RT performance.

In some titles that are completely RT limited, probably (Minecraft RT?). In the others the pipeline likely has other bottlenecking factors, and you will see the improvement leaning more towards the raster factor increase.

I'd expect the opposite - bigger gains in "lite RT" titles than in heavy path traced ones.
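For what it's worth, the 80% figure quoted above is just the two factors multiplied together. A rough sketch of that arithmetic, assuming RT performance scales perfectly with CU count and nothing else in the pipeline bottlenecks (the function name is made up for illustration):

```python
def combined_uplift(per_cu_gain: float, cu_count_gain: float) -> float:
    """Combined fractional uplift when two independent gains multiply."""
    return (1.0 + per_cu_gain) * (1.0 + cu_count_gain) - 1.0

# Figures from the quoted post: +50% RT performance per CU, +20% CUs.
rt_uplift = combined_uplift(0.50, 0.20)
print(f"{rt_uplift:.0%}")  # 80%
```

That is a best case only; any title that is not fully RT limited would land somewhere below it.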

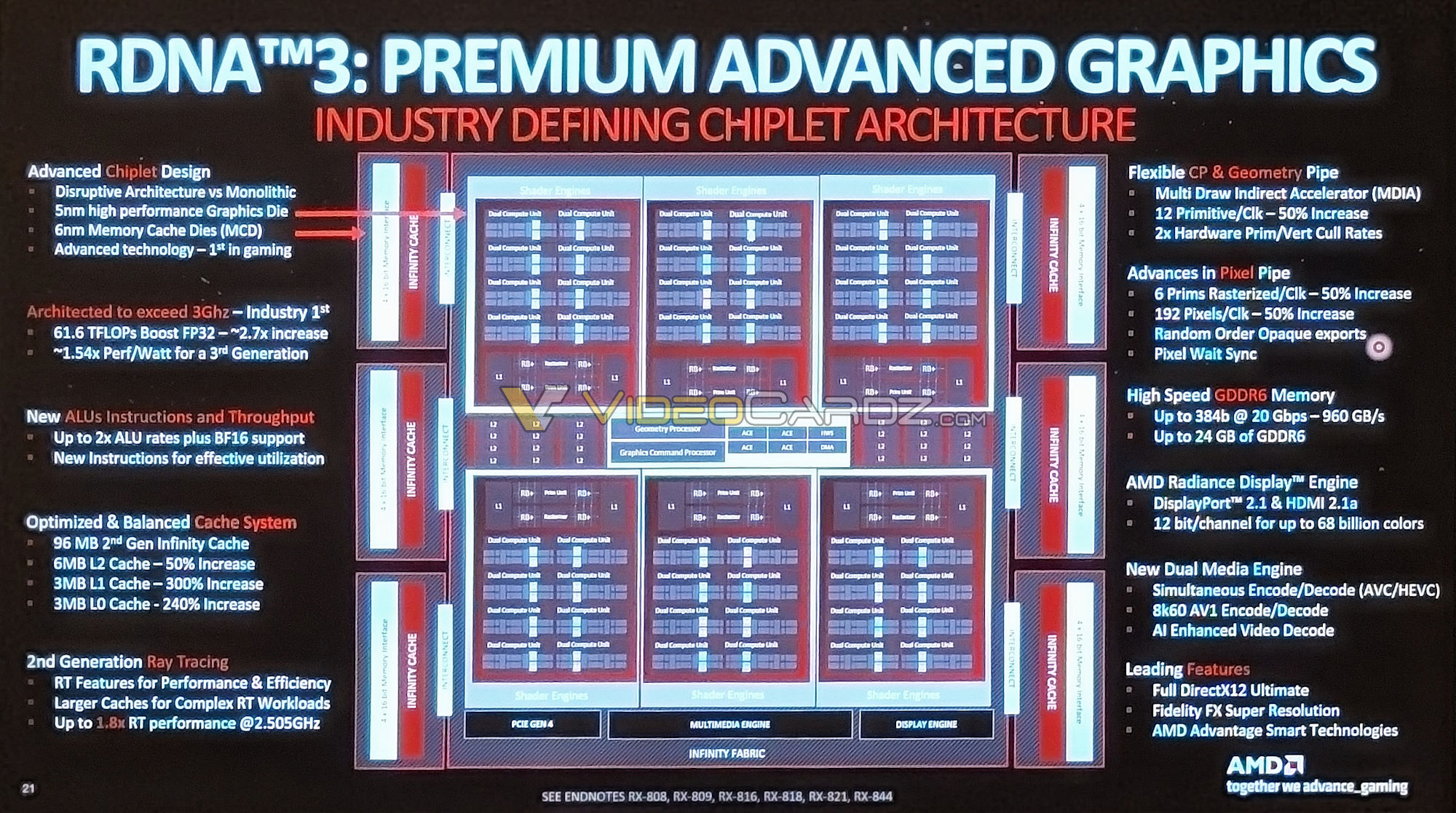

architecture "to exceed 3GHz" confirmed

But it didn't because stuff happened.

I'm not sure what that means - could you give an example of a game where this could possibly be the case?

Like the 7900 is not a non-RT GPU, it's just a GPU whose RT performance will likely only match a 2-year-old Ampere 3080. That's definitely disappointing, but it's far, far above the RT performance of the S, X and PS5. The main advantage of the PC is choice, you're not restricted to what the developer personally felt were the right compromises for a particular framerate. There is not going to be something released within this console generation that will only run well on Ada, as that would be financial suicide for the developer.

The argument isn't that "RT is worthless and has no future" - the argument is that the sacrifices it brings to performance and resolution are too great for the small improvements it brings in many of the current titles - at least at the framerate standards that have been raised considerably in the past 5 years. You can mitigate those, sure - but the actual cost in $ is far too high for the majority of PC gamers.

If the 4070-whatever comes out in 2023, is in the same price ballpark as the 7900, and has a 20% raster disadvantage but a 100% RT advantage, then you can definitely make the argument that it's a shortsighted decision to go with Radeon - like it would have been when Ampere was introduced and the 6800/6900 series were priced almost identically, which explains why Nvidia completely took over in market share (that, and in many games you could argue it was also much faster in raster due to DLSS). We'll see with actual benchmarks I guess, I don't think the 4080 will necessarily put a cork in this argument though.

You get that with a 7900 series card though, in spades. And you can get that without RT as well - ports like God of War, Days Gone etc have significant raster improvements even outside of framerate/resolution. Again I don't think anyone is arguing that there is no point to ray tracing at all, just that the cost to get a product that can run full-RT games at the resolutions/framerates many gamers want is too high atm, so the sacrifice to that area of performance may be more acceptable if the other 2 can be met at a more reasonable price point.

What are the Ada rates for the metrics listed under "CP & Geometry" and "Pixel Pipe"?

architecture "to exceed 3GHz" confirmed

That might be ok if people are aware of it in relation to PSUs and before they specified this in detail in 2018. The R9 is from 2014 so during the wild west period.

True, though it makes you wonder about Corsair's 600W 12VHPWR connector as it only has two 8-pins.

AD102 has 12 rasterizers (GPCs), 144 geometry units (TPC) and 16 ROPs per GPC (192). A 4090 at 2750MHz (real clock rate) can process 33 billion triangles per second.
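The totals in that post can be reproduced with some quick arithmetic. This is only a sketch: it assumes each rasterizer sets up one triangle per clock, which the post doesn't state but which is what the 33 billion figure implies, and the function and constant names are made up for illustration.

```python
# Figures quoted in the post above (AD102 / RTX 4090).
RASTERIZERS = 12      # one per GPC
ROPS_PER_GPC = 16
CLOCK_MHZ = 2750      # "real" clock rate from the post

# Assumption (not stated in the post): one triangle per rasterizer per clock.
TRIS_PER_RASTERIZER_PER_CLOCK = 1

def peak_triangles_per_second(rasterizers, clock_mhz,
                              tris_per_clock=TRIS_PER_RASTERIZER_PER_CLOCK):
    """Theoretical peak triangle rate in triangles per second."""
    return rasterizers * tris_per_clock * clock_mhz * 1_000_000

total_rops = RASTERIZERS * ROPS_PER_GPC
tri_rate = peak_triangles_per_second(RASTERIZERS, CLOCK_MHZ)

print(total_rops)      # 192
print(tri_rate / 1e9)  # 33.0 (billion triangles per second)
```

These are theoretical peaks at the quoted clock, not measured throughput.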