I think a 64 or 128 MB Infinity Cache certainly would have helped even the PS5.

No doubt, IC is a bandwidth-saving feature. It will eat up die space, though, and its cost effectiveness alongside the PS5's existing cache management isn't obvious. For example, stacking two bandwidth-saving features doesn't mean their savings add up linearly.
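The point about stacking bandwidth-saving features can be illustrated with a rough sketch. It assumes the second feature only acts on the DRAM traffic the first one did not already remove, and that the two are otherwise independent. The percentages are made-up placeholders, not measurements of PS5, IC, or any real hardware.

```python
def combined_saving(a: float, b: float) -> float:
    """Fraction of DRAM traffic removed when feature B only acts on
    the traffic that feature A left behind (independence assumed)."""
    return 1.0 - (1.0 - a) * (1.0 - b)


# Hypothetical savings for two features; purely illustrative numbers.
a, b = 0.30, 0.40
print(f"naive sum: {a + b:.2f}")
print(f"stacked:   {combined_saving(a, b):.2f}")
```

Under these assumptions the stacked saving is always below the naive sum, so the second feature buys less per sq mm than it would on its own.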

This is the part where knowing the PS5's and 6800/6900's I/O size would help determine how much IC, if any, is possible.

You don't need to know that. I've shown you have a 290 sq mm die with no IC, and 15 sq mm free to fit in the aforementioned blocks. You also haven't commented on any of my Multimedia logic queries.

We've discussed XSX SSD IO at 13 sq mm, and you've added an additional 5 sq mm to make 18 sq mm which you have incorporated into a 333 sq mm die. Removing 43 sq mm gives you 290 sq mm with zero IC.

You need to account for 15 sq mm before seeing how much IC to add. So, still missing is:

- SRAM for IO Complex

- Multimedia logic

- 2MB L2 cache

- Halved Command Processor, Geometry Processor and ACEs

After this, see if you have anything left. As I mentioned in my original post regarding die size, you don't have much wiggle room for a lot of SRAM. I know you are optimistic, but the numbers are in front of you - 15 sq mm still needs accounting for before IC.
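To make the bookkeeping explicit, here is a minimal sketch of the area budget using only the figures quoted in this exchange (13 + 5 sq mm for IO, a 333 sq mm hypothetical die, 43 sq mm removed, 15 sq mm free). The per-block sizes in `placeholder_blocks` are hypothetical values I've picked for illustration; nobody in this thread has measured them.

```python
# Figures taken from the discussion (all in sq mm).
XSX_SSD_IO = 13.0
EXTRA_IO = 5.0
HYPOTHETICAL_DIE = 333.0
REMOVED = 43.0
FREE_AREA = 15.0

io_area = XSX_SSD_IO + EXTRA_IO          # 18 sq mm of IO
die_no_ic = HYPOTHETICAL_DIE - REMOVED   # 290 sq mm with zero IC


def area_left_for_ic(free_area: float, block_estimates: dict) -> float:
    """Area remaining for IC once the still-missing blocks are placed."""
    return free_area - sum(block_estimates.values())


# Hypothetical placeholder sizes; replace with real die-shot estimates.
placeholder_blocks = {
    "io_complex_sram": 2.0,
    "multimedia_logic": 5.0,
    "l2_cache_2mb": 3.0,
    "halved_cp_gp_aces": 2.0,
}

print(f"IO area: {io_area} sq mm, die without IC: {die_no_ic} sq mm")
print(f"left for IC: {area_left_for_ic(FREE_AREA, placeholder_blocks)} sq mm")
```

Whatever estimates you plug in, the result is what is left over for IC SRAM, and it can easily go negative.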

Using 5700 i/o (~37mm2) as a baseline accounts for ~18mm2 that can be used for PS5 IO after we halve Navi21.

We've discussed this already. You've added 5 sq mm to 13 sq mm for XSX SSD IO, making 18 sq mm incorporated in your 333 sq mm hypothetical die.

Btw, isn't L2 included with the PHY/MC in RDNA?

Well, you could ignore it, but I wouldn't be too sure. The Hotchips XSX die shot doesn't clearly show SRAM within the MC block. There is a higher resolution die shot that seems to show cache structures within the MC block.

Well, that's true to a large extent; it's practically what the Cache Coherency Engines are for: pinpoint eviction of stale data in the GPU caches. I'm still curious whether it's demand scrubbing or patrol scrubbing.

@3dilettante had a pretty detailed post in one of these threads that went into cache scrubbing and the benefits (and drawbacks).

I'm also curious whether these affect the CPU's caches too, as Cerny's presentation only highlighted the GPU caches in the block diagram.

Just going off the little I know, I'd assume Sony are using demand scrubbing, because patrol scrubbing waits on idle periods, and I don't see why you'd want intentional idle periods on a gaming device where you want components busy with work at all times.

You won't see 100% utilisation in a gaming device, so idle time is expected. PS5 does have variable clocks to adjust for any idling and conserve power if required.
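The difference between the two approaches can be shown with a toy model. This is only a sketch of the general idea as the terms are used in this thread (demand scrubbing acts when a line is accessed, patrol scrubbing sweeps during idle cycles); `ToyCache` and its methods are hypothetical names, and none of this describes how the PS5's coherency hardware actually works.

```python
class ToyCache:
    """Toy model: each line is either clean or stale."""

    def __init__(self, num_lines: int):
        # True means the line holds stale data that must not be used.
        self.stale = [False] * num_lines
        self.patrol_cursor = 0

    def mark_stale(self, line: int) -> None:
        self.stale[line] = True

    def _scrub(self, line: int) -> bool:
        """Clean one line; return True if it was stale."""
        was_stale = self.stale[line]
        self.stale[line] = False
        return was_stale

    def demand_access(self, line: int) -> bool:
        """Demand scrubbing: a line is checked only when it is accessed."""
        return self._scrub(line)

    def patrol_step(self) -> bool:
        """Patrol scrubbing: on an idle cycle, check the next line in a
        round-robin sweep, whether or not anything asked for it."""
        line = self.patrol_cursor
        self.patrol_cursor = (self.patrol_cursor + 1) % len(self.stale)
        return self._scrub(line)


cache = ToyCache(4)
cache.mark_stale(2)
# Patrol needs three idle cycles to reach line 2 from a cursor at line 0.
print([cache.patrol_step() for _ in range(3)])
```

In this model demand scrubbing pays its cost on the access path, while patrol scrubbing only makes progress when idle cycles exist, which is the trade-off being argued over above.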

Could they maybe have used PS-RAM instead? Dunno how good the bandwidth and latency are on top-end off-die PS-RAM chips, but I've seen decent sizes (32 MB, 64 MB etc.) go for relatively cheap prices in bulk through wholesalers, and those are small bulk orders compared to the quantities a console would be buying.

Gamecube used 1T-SRAM, so there are other options.

Yeah, if it ain't broke don't fix it. That said, it's just a bit odd they couldn't be asked to wait for AMD on IC, if they have apparently already waited on them for so many other things for full RDNA2 compatibility. Though that could raise the question of what "full" means in the first place, but that's a different conversation. The thing is, they did kind of go wider with Series X, but it's actually still narrower than One X (384-bit).

Cache is meant to be transparent in hardware, and 'full RDNA2' is just a marketing term. XSX is more a fulfilment of DX12 Ultimate.
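On the bus-width comparison quoted above, width alone doesn't settle bandwidth, since the per-pin data rate matters just as much. A quick sketch of the usual peak-bandwidth arithmetic follows. Only the 384-bit One X figure comes from this thread; the 320-bit Series X width and both data rates are publicly quoted specs that I'm treating as assumptions here.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: (bus width in bytes) x (per-pin data rate)."""
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, per-pin data rate in Gbps); rates are assumptions
# taken from public spec sheets, not from this thread.
consoles = {
    "One X (GDDR5)": (384, 6.8),
    "Series X (GDDR6)": (320, 14.0),
}

for name, (width, rate) in consoles.items():
    print(f"{name}: {peak_bandwidth_gb_s(width, rate):.1f} GB/s")
```

So a narrower bus can still deliver more raw bandwidth, which is separate from whatever a large on-die cache would save on top.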

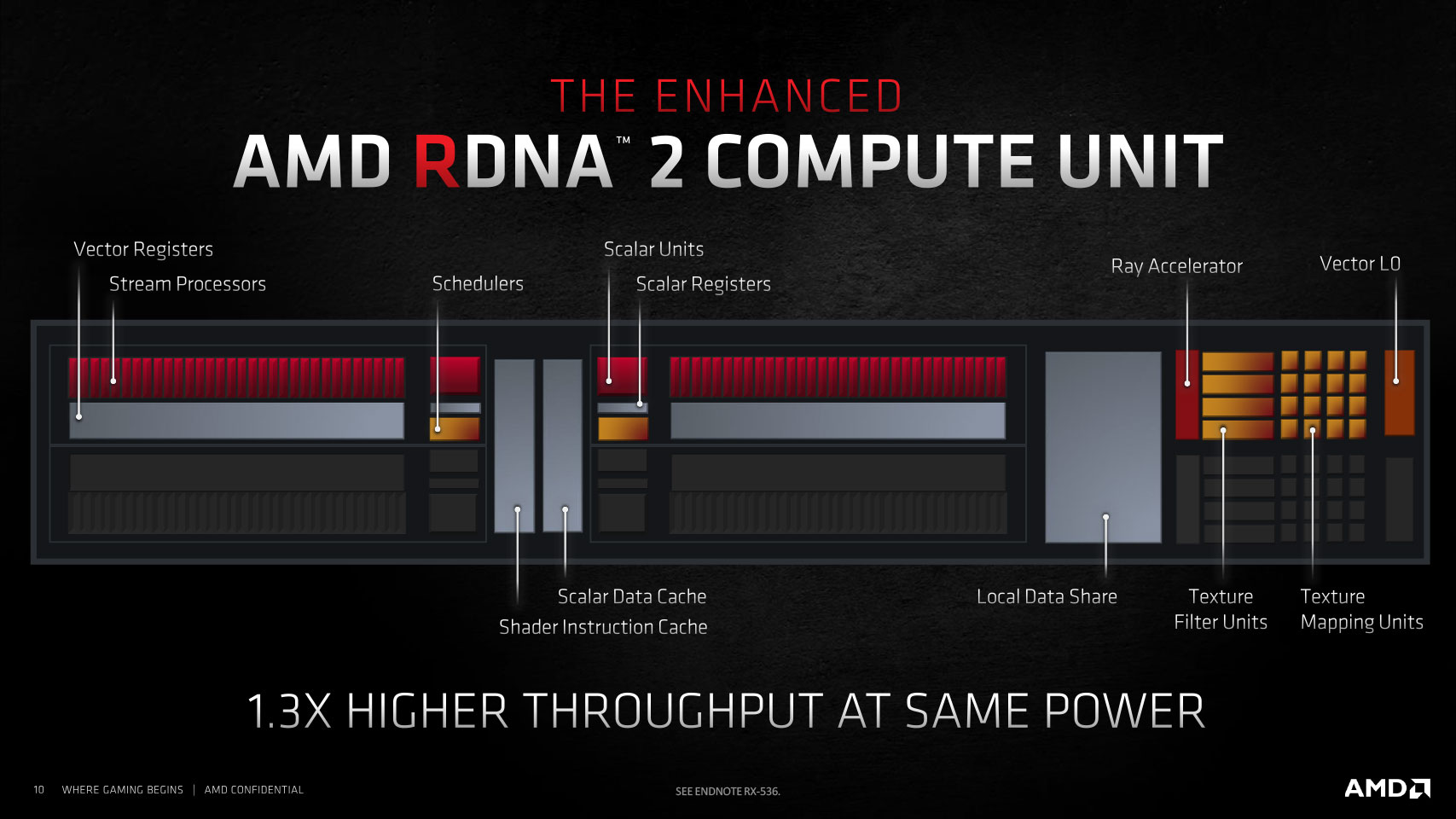

This should (thankfully, hopefully) also indicate that the frontend has been improved greatly for RDNA2, because that'd be an absolute requirement to make such a strategy even work (in addition to other improvements too, of course).

I'm not convinced the XSX frontend is RDNA2 specification. You can check the Rasteriser Units in the Hotchips block diagram: there are 4, and each Raster Unit is at the Shader Array level. Compare that to Navi21, where the Raster Units are at the Shader Engine level.

Also, check Navi21 Lite in the driver leaks (aka the XSX GPU): its driver entries show no change from RDNA1 to SIMD waves (CUs), and the Scan Converter/Packer arrangement is unchanged from RDNA1 as well.

Yeah, like deferred rendering. It's crazy that Dreamcast was one of the first (probably the first) gaming systems with it at the hardware level, but it seemingly hasn't become a thing baked into consoles again until PS5 and Series, mainly because of AMD's work nowadays. At least, that's my understanding of it. Not that AMD have a literal deferred rendering feature, just something analogous to it.

FYI, PowerVR existed on PC before Dreamcast. Smartphones also use/used TBDR. And a handheld console, the PS Vita, also used TBDR with a PowerVR GPU.

Interestingly, Mark Cerny was involved with the Vita, and there are hints that PS5 has analogies. I haven't found anything concrete yet pointing to a TBDR architecture.