I don't think I'm alone in preferring the flashy lights, fancy mirrors, etc.

Definitely not. I can't wait to see RT in CB 2077, it seems like the perfect title to show it off.

News from HotHardware:

The answer about the rasterizer leaves me with more questions. This is the answer HotHardware got from AMD's Scott Herkelman:

Someone should ask them why they chose to go from 1 primitive unit per SA to 1 per SE while in the consoles they kept them at 1 per SE.

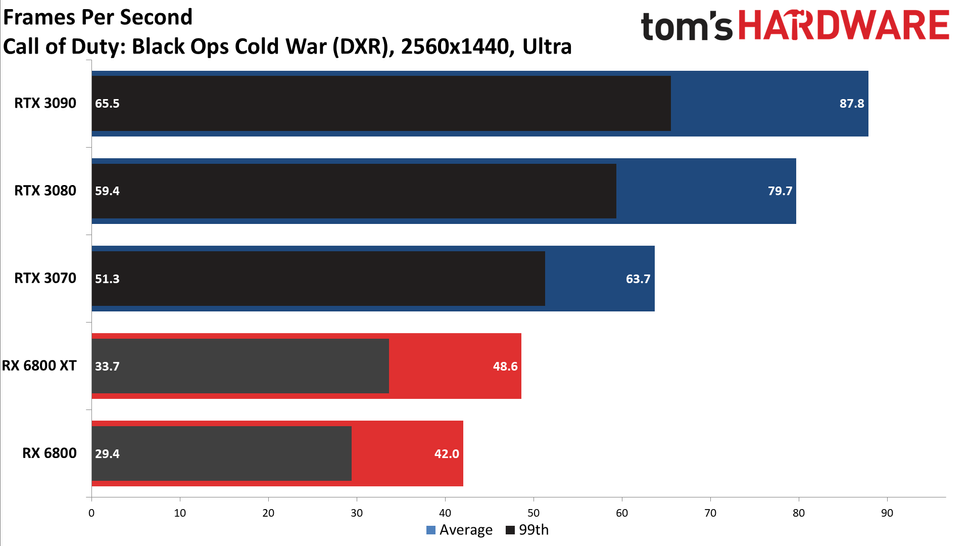

In today's world I think it's better to have better rasterization than RT, since the number of games with RT is very limited and games with useful RT are like unicorns. And all of the "but future proof!" talk makes no sense to me. Nvidia is barely making it in terms of performance and AMD is barely, barely making it, so I don't think today's GPUs will be able to use full RT in next-gen games.

I'm not sure I agree with this. Most games releasing on the new gen consoles use some level of RT which means that the majority of PC games from now on are likely to include RT. It may soon become the case that benchmarking without RT is as relevant as benchmarking without AA 10 years ago.

I really have no idea why we have RT GPUs... we are *at least* 1 gen away from truly usable perf., and the space on the die could be used to have faster and better GPUs capable of more "traditional" effects that would be more useful than slightly better shadows that eat 20-30-50% of the frame rate just so you can "admire" the difference in a static pic in a side-by-side comparison. Ridiculous...

But when you look at the difference it makes in Miles Morales on relatively anemic hardware, there's clearly an argument for it, especially on high-end Ampere GPUs, which likely have 3-4x that level of performance at least. Personally I do agree that shadows are a questionable use of the technology given the level of performance they seem to suck up, although I'd need to see a more detailed analysis to finalise that conclusion. But the reflections in Watch Dogs, for example, are transformative IMO.