and next gen TAA that delivers potentially quite big improvements (UE4's new one already equals DLSS 2.0 more or less)

This seems like a bold claim. I'd be interested in checking out the analysis that led you to this conclusion.

AMD's Ray Accelerators take the definition of a ray and work out if the ray passes either:

Are the ray tracing units on Navi21 doing the denoising too?

Denoising can help bridge the gap between a low-samples-per-pixel image and the ground truth. It does this by reusing previous samples through spatio-temporal reprojection, by adaptively resampling radiance or statistical information for importance sampling, and by applying filters such as fast Gaussian or bilateral filters, or AI techniques such as denoising autoencoders and upscaling through super sampling.
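
A minimal sketch of the temporal half of this: one pixel's noisy per-frame estimates are blended into a running history with an exponential moving average. The noise level (`0.5`), the blend factor `alpha`, and the helper names are hypothetical, and the camera and scene are assumed static so reprojection maps the pixel onto itself; a real renderer would reproject with motion vectors and reject stale history.

```python
import random
import statistics


def noisy_sample(true_radiance: float, rng: random.Random) -> float:
    # Hypothetical 1-spp estimate: the true value plus zero-mean Gaussian noise.
    return true_radiance + rng.gauss(0.0, 0.5)


def temporal_accumulate(frames: int, alpha: float, true_radiance: float, seed: int = 0):
    """Blend each new frame's sample into a per-pixel history (exponential moving average).

    Assumes a static camera and scene, so the previous history can be reused directly.
    """
    rng = random.Random(seed)
    raw = [noisy_sample(true_radiance, rng) for _ in range(frames)]
    history = raw[0]
    accumulated = [history]
    for current in raw[1:]:
        # A small alpha keeps most of the history: less noise, but slower to react.
        history = alpha * current + (1.0 - alpha) * history
        accumulated.append(history)
    return raw, accumulated


raw, accumulated = temporal_accumulate(frames=500, alpha=0.1, true_radiance=1.0)
# Skip the warm-up frames before comparing the spread of the two sequences.
print(statistics.stdev(raw[100:]), statistics.stdev(accumulated[100:]))
```

The same small `alpha` that suppresses noise is also what makes the history slow to follow a real change in lighting.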

Denoising isn't perfect: temporal techniques can introduce a lag in radiance, and any filter will introduce some loss of sharpness because it blurs the original image. Still, guided filters can help maintain sharpness, and adaptive sampling or increasing the samples per pixel each frame can make the difference between denoised and ground-truth images negligible. There's no substitute for more samples per pixel, though, so experiment with these techniques at different sample-per-pixel (spp) counts.
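
To illustrate the sharpness point, here is a 1D comparison of a plain Gaussian filter and a bilateral filter (one of the edge-aware filters mentioned above) on a hard edge. The step signal and the `sigma_s`/`sigma_r` values are made up for illustration; the range term is what stops the bilateral filter from averaging across the edge.

```python
import math


def gaussian_weight(distance: float, sigma: float) -> float:
    return math.exp(-(distance * distance) / (2.0 * sigma * sigma))


def gaussian_filter(signal, radius, sigma_s):
    out = []
    n = len(signal)
    for i in range(n):
        total = weight_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            # Spatial weight only: every neighbour counts, regardless of its value.
            w = gaussian_weight(i - j, sigma_s)
            total += w * signal[j]
            weight_sum += w
        out.append(total / weight_sum)
    return out


def bilateral_filter(signal, radius, sigma_s, sigma_r):
    out = []
    n = len(signal)
    for i in range(n):
        total = weight_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            # Spatial weight times range weight: neighbours with very different values contribute little.
            w = gaussian_weight(i - j, sigma_s) * gaussian_weight(signal[i] - signal[j], sigma_r)
            total += w * signal[j]
            weight_sum += w
        out.append(total / weight_sum)
    return out


edge = [0.0] * 8 + [1.0] * 8
blurred = gaussian_filter(edge, radius=3, sigma_s=1.5)
preserved = bilateral_filter(edge, radius=3, sigma_s=1.5, sigma_r=0.1)
print(round(blurred[7], 3), round(preserved[7], 3))
```

With these values the Gaussian result bleeds roughly a third of the bright side (about 0.36) into the last dark sample, while the bilateral result stays essentially at 0.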

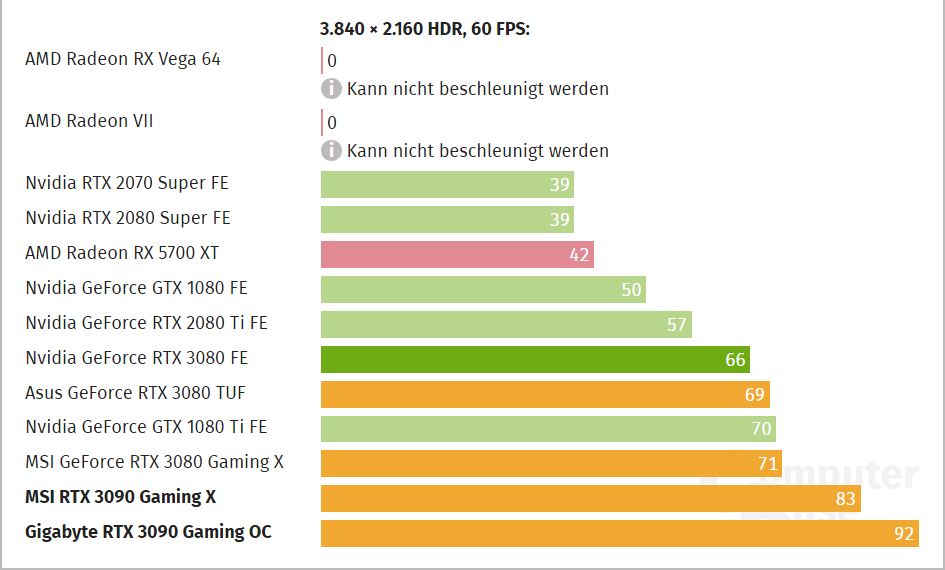

If I look at these benchmarks, we can say we are heavily front-end bound. Nvidia's high shader count cannot be filled with work because the front end is too small for it. The more pixels are involved (4K), the closer Nvidia gets. I think if we had 8K benchmarks, Nvidia would rule over AMD because of its shader power.

It would be nice to see 8K benchmarks on AMD and Nvidia cards with heavy shader effects on and off. With shader effects off, AMD will run circles around Nvidia with its polygon and ROP output because of its higher pixel throughput. If you then activate all shader effects, the situation will turn and Nvidia will run circles around AMD.
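
A toy bottleneck model of the scenario described above. Every rate, the triangle count, and the per-pixel shader costs are invented for illustration and do not describe any real AMD or Nvidia card; real GPUs overlap stages imperfectly and the bottleneck shifts within a frame.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    triangles_per_ms: float   # front-end (geometry/raster setup) rate
    pixels_per_ms: float      # ROP output rate
    shader_ops_per_ms: float  # ALU throughput


def frame_time_ms(gpu: Gpu, triangles: int, pixels: int, ops_per_pixel: float) -> float:
    """Toy model: the slowest of three fully overlapped stages sets the frame time."""
    front_end = triangles / gpu.triangles_per_ms
    rop = pixels / gpu.pixels_per_ms
    shading = pixels * ops_per_pixel / gpu.shader_ops_per_ms
    return max(front_end, rop, shading)


# Hypothetical cards: A has the wider front end and more ROP throughput,
# B has far more shader throughput. These are made-up numbers, not real specs.
gpu_a = Gpu("A", triangles_per_ms=4_000, pixels_per_ms=200_000, shader_ops_per_ms=20_000_000)
gpu_b = Gpu("B", triangles_per_ms=3_000, pixels_per_ms=160_000, shader_ops_per_ms=35_000_000)

TRIANGLES = 60_000
for label, pixels in [("1080p", 1920 * 1080), ("8K", 7680 * 4320)]:
    for shading, ops in [("light", 50), ("heavy", 400)]:
        a = frame_time_ms(gpu_a, TRIANGLES, pixels, ops)
        b = frame_time_ms(gpu_b, TRIANGLES, pixels, ops)
        print(f"{label} {shading}: A {a:.1f} ms, B {b:.1f} ms")
```

In this setup A is faster whenever shading is light (its front end or ROPs set the pace), and B pulls ahead once heavy shading makes ALU throughput the limit.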

That can be true in general, but not particularly when talking about Ampere. GDDR6X requires more power and offers higher bandwidth. But if your product isn't able to utilize all that bandwidth, it just consumes more power and becomes less power-efficient. Look at the RTX 3070 with GDDR6 and the RTX 3080 with GDDR6X. The latter has 70 % (!!!) higher theoretical bandwidth than the former, but offers only 26-32 % higher performance (1440p and 4K, per ComputerBase). The RTX 3080 has the same number of ROPs as the RTX 3070 and a slightly lower boost clock (so its fillrate is probably lower), and the extra bandwidth cannot be efficiently utilized. But the GDDR6X modules run at full speed and consume more power.
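
The arithmetic behind that comparison, as a small sketch. The bus widths, memory data rates, ROP counts, and boost clocks are the reference-card specifications as I understand them (RTX 3070: 256-bit at 14 Gbps, 96 ROPs, 1725 MHz; RTX 3080: 320-bit at 19 Gbps, 96 ROPs, 1710 MHz), so double-check them against the vendor sheets; the 26-32 % performance range is the ComputerBase figure quoted above.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    # Peak bandwidth (GB/s) = bus width in bits x per-pin data rate (Gbit/s) / 8 bits per byte.
    return bus_width_bits * data_rate_gbps / 8


def pixel_fillrate_gpix_s(rops: int, boost_clock_mhz: float) -> float:
    # Peak pixel fillrate (Gpixel/s), assuming one pixel per ROP per clock.
    return rops * boost_clock_mhz / 1000


rtx_3070_bw = bandwidth_gb_s(256, 14)  # GDDR6
rtx_3080_bw = bandwidth_gb_s(320, 19)  # GDDR6X
bw_ratio = rtx_3080_bw / rtx_3070_bw

print(f"3070: {rtx_3070_bw:.0f} GB/s, 3080: {rtx_3080_bw:.0f} GB/s, ratio {bw_ratio:.2f}")
print(f"fillrate 3070: {pixel_fillrate_gpix_s(96, 1725):.1f}, 3080: {pixel_fillrate_gpix_s(96, 1710):.1f} Gpix/s")

# Performance gained per unit of extra bandwidth, using the 26-32 % range quoted above.
for perf_ratio in (1.26, 1.32):
    print(f"perf ratio {perf_ratio:.2f} -> perf per bandwidth {perf_ratio / bw_ratio:.2f}")
```

Roughly 1.70x the bandwidth for 1.26-1.32x the performance works out to about 0.74-0.78 of the 3070's performance per unit of bandwidth, while peak fillrate is essentially unchanged (about 165.6 vs 164.2 Gpixel/s).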

eTeknix leaked a new benchmark on Facebook. I don't see how anyone can argue against this; it's clear AMD is way ahead.

If I look at these benchmarks, we can say we are heavily front-end bound.

@trinibwoy If we look at Ampere, it has a huge shader count and can barely utilize it. AMD has 50 % fewer shaders than Ampere but performs at the same level. Even taking clock speed into consideration, AMD's clock is only 15 % higher than Ampere's, which means most of AMD's performance comes from utilizing its 5,000 shaders well, and that Ampere has a big problem utilizing its 10,000 shaders.

So Ampere is indeed front-end bound.
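
The utilization argument can be put in numbers. This sketch uses the post's own round figures (5,000 vs 10,000 shaders, a 15 % clock advantage for AMD, equal measured performance) and assumes both architectures do the same work per shader per clock, which is a simplification; it quantifies the gap but does not by itself show which stage of the pipeline causes it.

```python
def relative_peak_throughput(shaders_a: int, clock_a: float, shaders_b: int, clock_b: float) -> float:
    # Peak ALU throughput taken as shader count x clock (same work per shader per clock assumed).
    return (shaders_a * clock_a) / (shaders_b * clock_b)


# Ampere: 10,000 shaders at a normalized clock of 1.0; AMD: 5,000 shaders at 1.15.
ampere_over_amd = relative_peak_throughput(10_000, 1.0, 5_000, 1.15)

# With equal measured performance, Ampere's achieved fraction of peak relative to AMD's
# is the inverse of its peak advantage.
relative_utilization = 1 / ampere_over_amd

print(f"Ampere peak advantage: {ampere_over_amd:.2f}x")
print(f"Ampere utilization relative to AMD: {relative_utilization:.1%}")
```

On these figures Ampere has about 1.74x AMD's peak shader throughput, so matching AMD's performance means it extracts only about 57.5 % as much work per shader-clock.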

unless it’s fillrate limited.

If it were fillrate limited, Nvidia would not gain more and more ground going up to 4K!

Fun fact: the biggest generational jumps we have seen came when a GPU manufacturer went from a high-shader-count design to a low-shader-count design. Remember Pascal! That was the same situation.

1. Memory bus is not idle during gaming.

When a memory bus is idle, it does not consume a significant amount of power. And the power spent refreshing the DRAM arrays is no greater with GDDR6X than with GDDR6.

@Scott_Arm GPUs are pipelines. At higher resolution you need fewer polygons to fill up the ROPs. At low resolution, one polygon is rasterized into 4 pixels; at higher resolution, the same polygon is rasterized into 16 pixels. So the higher the resolution, the more ROP-bound you become compared to lower resolutions.
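
The scaling in that example is just the pixel-count ratio between resolutions. A sketch assuming the 4-pixel case is 1080p and the 16-pixel case is 4K (the post doesn't name the resolutions; 4K has exactly four times the pixels of 1080p):

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixels_per_triangle(base_pixels: float, base_res: str, target_res: str) -> float:
    # A triangle's screen-space area (in pixels) scales with the total pixel count.
    base_w, base_h = RESOLUTIONS[base_res]
    target_w, target_h = RESOLUTIONS[target_res]
    return base_pixels * (target_w * target_h) / (base_w * base_h)


for name in RESOLUTIONS:
    print(f"{name}: {pixels_per_triangle(4, '1080p', name):.1f} pixels per triangle")
```

With the triangle count fixed, the per-triangle front-end work stays the same while the per-triangle ROP work grows (about 7.1 pixels at 1440p, 16 at 4K, 64 at 8K), which is the shift toward being ROP-bound that the post describes.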
