I guess that might depend on how much you could win in the silicon lottery. I was under the impression that things got a bit less variable after the introduction of FinFET, but honestly I haven't really made an effort to stay up to date with 7nm and beyond yet. Were there vastly different clock speeds in Navi10 with standard 7nm already?

We know that these newest process nodes have fairly high variability in clocks/power - the silicon lottery only gets worse as time goes by.

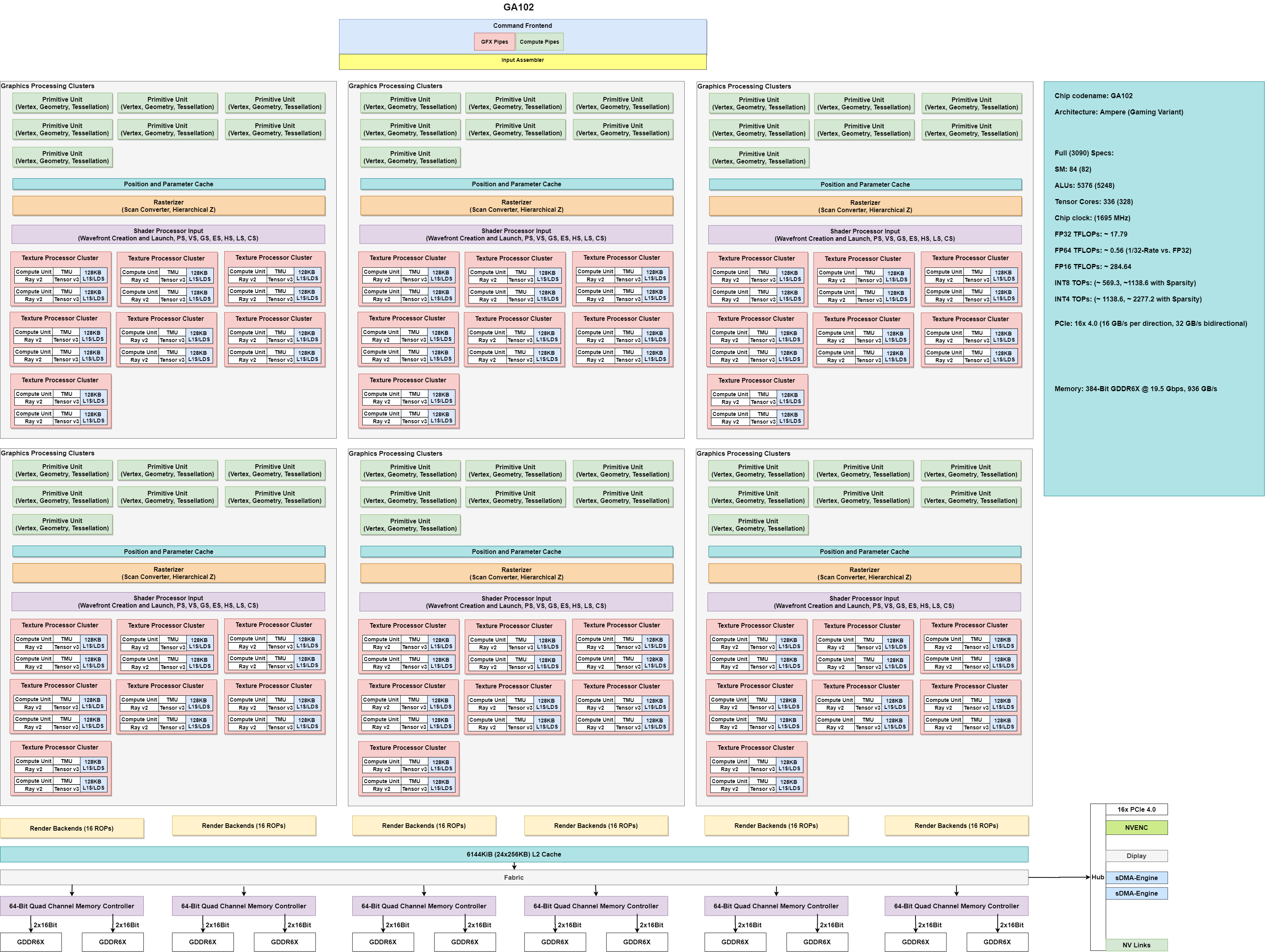

84SM + best silicon + ~8% better bandwidth?

If you're interested in a 3090, are you not going to wait to find out what the 3090Ti does? Or is that going to be the Titan, which gamers just ignore because $4000?

And frankly, since the 3090 already has 24 GiB of G6X and GA100 has no RT cores, I could see this round going without a Titan-branded card. Having just a few pro features via driver for SPECviewperf narrows the niche even more.