Anarchist4000

Veteran

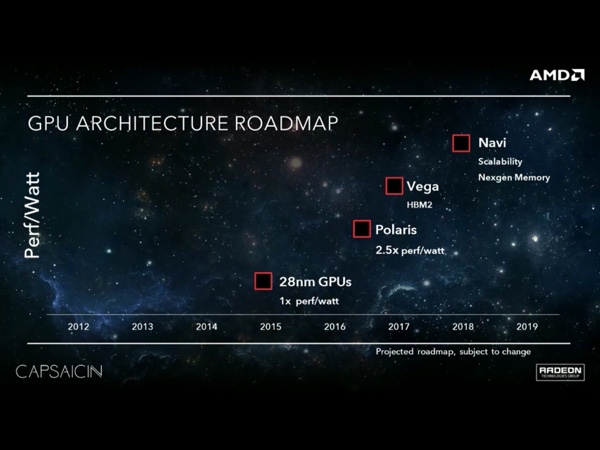

> I can understand if you think I'm biased based on what I've posted in this thread, but in reality I am super disappointed with AMD. Based on everything they've been saying the last few months, plus common sense and extrapolating from Fiji performance, I thought there was no way they could fail this hard.

Not in disagreement here, but the question remains why performance is where it is.

> BTW I only wanted you to cool it with the outrageous claims of dramatic future performance increases that may or may not appear.

I get that, but I don't view the claims as outrageous, for the reasons I listed. I normally run Linux, where large gains from driver updates aren't exactly uncommon, and I've been tracking AMD's Vega commits for that reason. The 2MB page change, which should only affect compute, was quoted at 10-15%, sometimes more, across most workloads. AMD has been working hard on those drivers, and that change is only now hitting git.
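To illustrate why bigger pages can help compute at all: each TLB entry maps one page, so 2MB pages let the same number of entries cover far more memory and cut the number of address translations a large buffer needs. This is a minimal back-of-envelope sketch; the 64-entry TLB and 256 MiB buffer are hypothetical numbers, not Vega figures, and it doesn't model how AMD's driver change actually works.

```python
# Back-of-envelope TLB reach: how much memory a fixed number of TLB entries
# can map, and how many translations a buffer needs, for 4 KiB vs 2 MiB pages.
KIB = 1024
MIB = 1024 * KIB


def tlb_reach(entries: int, page_size: int) -> int:
    """Bytes of address space mapped by `entries` TLB entries without a miss."""
    return entries * page_size


def pages_to_cover(working_set: int, page_size: int) -> int:
    """Distinct page translations needed to touch `working_set` bytes (rounded up)."""
    return -(-working_set // page_size)


if __name__ == "__main__":
    entries = 64              # hypothetical TLB size, NOT a Vega spec
    working_set = 256 * MIB   # hypothetical compute buffer
    for page_size, label in [(4 * KIB, "4 KiB"), (2 * MIB, "2 MiB")]:
        reach_mib = tlb_reach(entries, page_size) / MIB
        needed = pages_to_cover(working_set, page_size)
        print(f"{label} pages: {reach_mib:g} MiB reach, {needed} translations")
```

With those made-up numbers, 4 KiB pages give 0.25 MiB of reach and need 65536 translations, while 2 MiB pages give 128 MiB of reach and need only 128. Fewer misses is the mechanism; how much that buys in a real workload depends on its access pattern.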

Point being, there are a few cases, FP16 being one, where I legitimately feel Vega could get close to the Ti. The power issues are something weird, probably a result of driving all that SRAM too hard. Still no idea where that 45MB of SRAM went, and it may be the most significant piece of the puzzle we've been overlooking. In compute, Vega is beating the 1080 Ti in a number of tests, so the performance is there. For graphics I'm still wondering about TBDR, not DSBR. Fully enabled, it would address Vega's current shortcomings and would make sense of some unexplained design choices. That, or these chips were designed with giant APUs and low power in mind, where they could do rather well.
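Why FP16 is one of those cases: Vega's packed math runs two FP16 operations per 32-bit ALU lane, which doubles peak shader throughput for any work that can drop to half precision. As far as I know, GeForce Pascal gets no equivalent FP16 speedup, so the Ti's FP32 number is the one to compare against. A minimal sketch of the peak arithmetic; the shader counts and boost clocks are reference specs I'm assuming, not measurements, and peak ALU rate says nothing about bandwidth, ROPs, geometry or drivers.

```python
# Peak shader ALU throughput: shaders x 2 FLOPs per clock (one FMA) x clock,
# optionally doubled for packed FP16 (two half-precision ops per 32-bit lane).


def peak_tflops(shaders: int, boost_ghz: float, packed_fp16: bool = False) -> float:
    """Theoretical peak TFLOPS; ignores memory, ROPs, geometry and drivers."""
    flops_per_clock = shaders * 2          # one fused multiply-add = 2 FLOPs
    if packed_fp16:
        flops_per_clock *= 2               # two FP16 values per 32-bit ALU lane
    return flops_per_clock * boost_ghz / 1000.0  # GFLOPS -> TFLOPS


if __name__ == "__main__":
    # Assumed reference specs: Vega 64 = 4096 SPs @ 1.546 GHz boost,
    # GTX 1080 Ti = 3584 CUDA cores @ 1.582 GHz boost.
    print(f"Vega 64 FP32: {peak_tflops(4096, 1.546):.2f} TFLOPS")
    print(f"Vega 64 FP16: {peak_tflops(4096, 1.546, packed_fp16=True):.2f} TFLOPS")
    print(f"1080 Ti FP32: {peak_tflops(3584, 1.582):.2f} TFLOPS")
```

That works out to roughly 12.66, 25.33 and 11.34 TFLOPS. The FP16 figure only matters to the extent a game actually moves shader work to half precision, and on paper FP32 rates were never the problem, which is exactly why the question of where the graphics performance goes remains open.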

> There is, but when you're late like they are, by the time your performance starts looking favorable against the competition, the competition will have introduced new products. They really aren't in a good place ATM.

Is that before or after the current gold rush ends? If miners bought all the cards at above MSRP, does current gaming performance or performance a few months out matter more?

> AMD probably knew this and couldn't produce drivers that are actually able to bypass all the bottlenecks.

Going by some Linux driver commits to their compiler, which is likely used for all shaders, they have some features temporarily disabled due to an unforeseen bug. The commits say "would like to have" and "disabled until issue resolved", so there are some significant issues lingering. That's in regard to VGPR indexing, so it could be the bottleneck we're seeing.