You probably need to balance efficiency against having enough performance for enthusiasts to cycle out their older cards.

For example, I have absolutely no reason to replace my 980Ti with a 1080Ti if it's only 20% faster but 60% more power efficient. It means nothing. For me to spend money on a new card, I'd rather they make it 60% faster and 20% more efficient.
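To put rough numbers on those two scenarios, here's a quick sketch. It assumes "more power efficient" means performance per watt, so relative power draw is just the performance ratio divided by the efficiency ratio. The function name and the scenario labels are made up for illustration.

```python
def relative_power(perf_ratio, perf_per_watt_ratio):
    """Power draw of the new card relative to the old one.

    Assumes efficiency = performance / power, so
    power = performance / efficiency.
    """
    return perf_ratio / perf_per_watt_ratio


# Hypothetical scenarios taken from the percentages in the post above.
scenarios = {
    "20% faster, 60% more efficient": (1.20, 1.60),
    "60% faster, 20% more efficient": (1.60, 1.20),
}

for name, (perf, eff) in scenarios.items():
    power = relative_power(perf, eff)
    print(f"{name}: {power:.2f}x the old card's power draw")
```

Under that assumption, the first card draws about 0.75x the power of the old one, while the second draws about 1.33x. So the "60% faster" option is the one that pushes board power up, which is the trade being argued for here.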

While reduced power, cooling and all that are great to have, they take a backseat to outright performance at the enthusiast level. Since enthusiast level = highest margins, you need to ensure your performance bump is enticing enough for people to make the jump.

I think that leaves out various optimization points that are less consumer-focused but still important from an economic and practical standpoint.

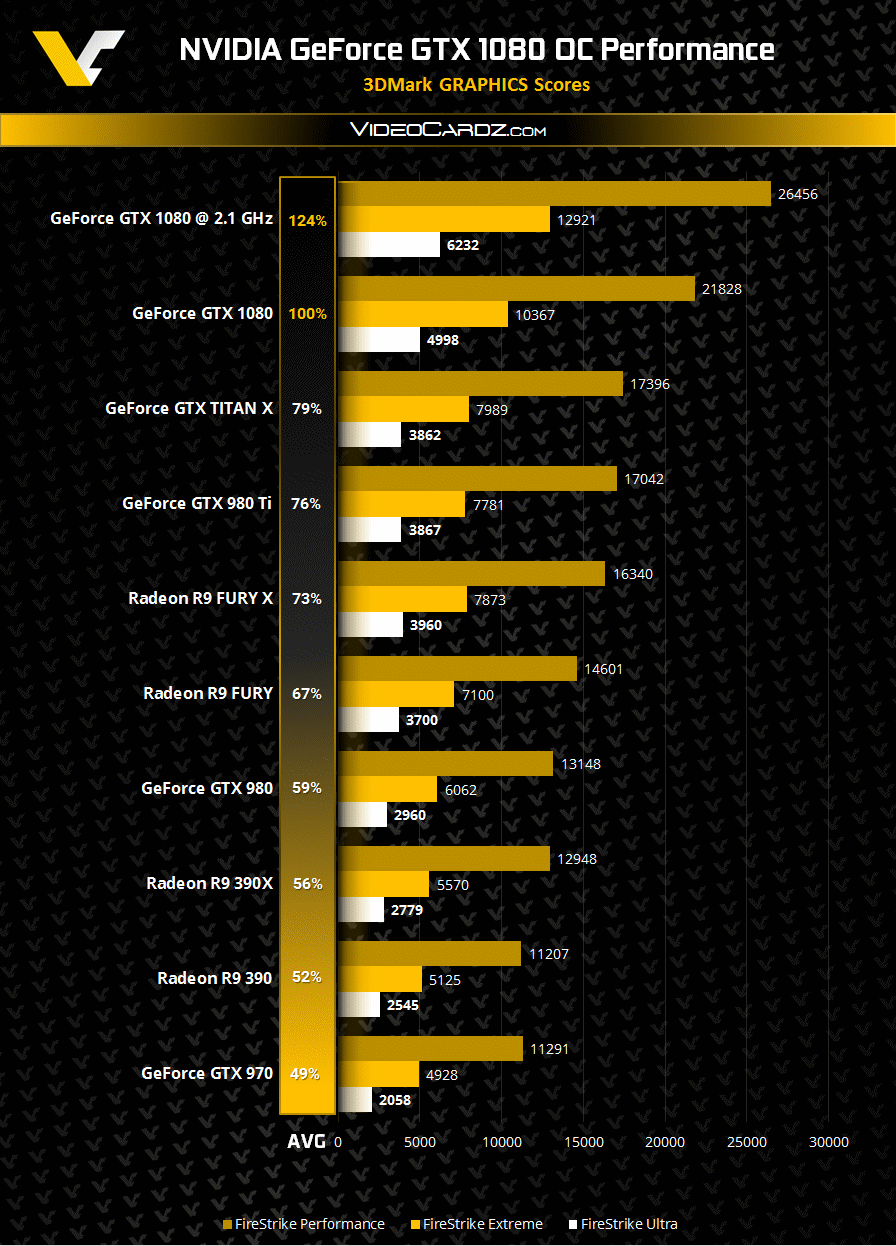

Nvidia's positioning of the product actually does make things closer to 60% faster and 20% more efficient, given that it's positioned as a successor to the GTX 980 (non-Ti).

Area-wise, its die is closer to the 980's than to the area taken up by the 980Ti.

Did Nvidia label the 1080 a Ti? I thought it was non-Ti, in the tradition of a launch leader that someday ends up mid-tier.

Risk management, given the process uncertainties and unknown competition, would also discourage going all-out in area. One can push clocks and lose efficiency later in the game to position a product, but those mm² get locked in much earlier, along with any cost/yield trouble they might cause.

There's also a desire to leave some room to grow for what might be a long-lived node.