Sorry to butt into this amazing round-and-round discussion (last few pages really are going round in circles, boys!), but isn't the point here not really PS4's fabled technical capabilities, but the fact that Sony will support (and finance?) projects that might not use traditional rendering methods, like Dreams? Just like they seem to have supported other seemingly crazy ideas in the past, to various degrees of success?

Could PlayStation 4 breathe new life into Software Based Rendering?

- Thread starter onQ

- Start date

- Status

- Not open for further replies.

That's not what Shifty and HTupolev are talking about though. You need to elaborate on that 8x8 MIMD term. What I understand from the fourth is that results of GPU work can easily be handed off to the CPU. I'm guessing memory mapping changes here without any copy operation, which is great and possible on consoles only.

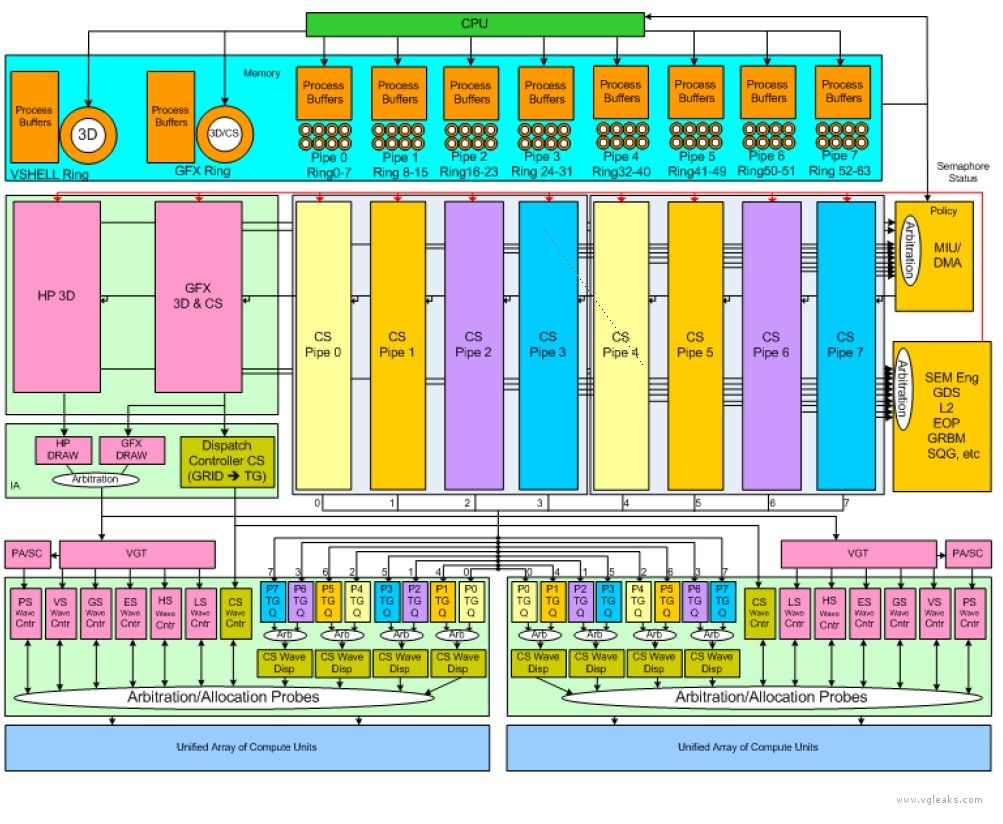

Just like the PPU handed off work to the SPUs, Jaguar is handing off work to the 8 compute pipelines.

onQ, your usage of the term MIMD is incorrect. I think if you use correct terminology you'd be better able to express your thoughts and it would lead to better discussion.

If the PS4's 8 compute pipelines aren't MIMD, what are they?

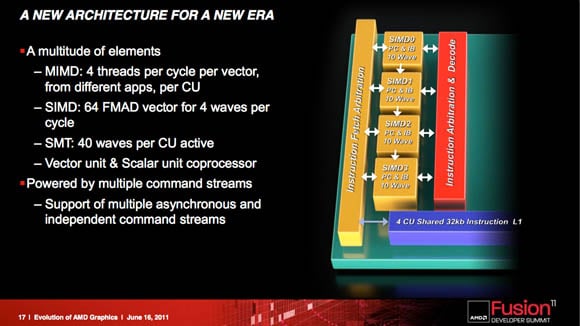

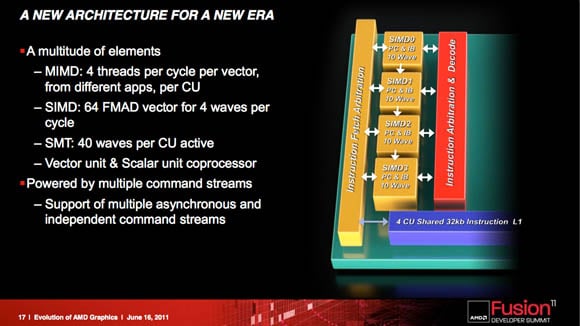

AMD GCN CUs each have 4 SIMD vector ALUs that share an instruction decoder. I don't believe any GPUs are considered MIMD architecture at this point, but I could be wrong. I'm not an expert.
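For anyone following along, here is a minimal Python sketch of the SIMD versus MIMD distinction being argued about. It is a toy model only, not how a GCN CU is actually built: `OPS`, `simd_step` and `mimd_step` are made-up names for illustration. The SIMD step decodes one instruction and applies it to every lane, while the MIMD step lets every lane carry its own instruction.

```python
import operator

# Toy instruction set (illustrative only, not a real ISA).
OPS = {"add": operator.add, "mul": operator.mul, "sub": operator.sub}

def simd_step(instruction, lanes, operand):
    """Decode ONE instruction and apply it to every lane."""
    op = OPS[instruction]
    return [op(value, operand) for value in lanes]

def mimd_step(instructions, lanes, operand):
    """Each lane executes its OWN instruction on its own data."""
    if len(instructions) != len(lanes):
        raise ValueError("MIMD needs one instruction per lane")
    return [OPS[name](value, operand) for name, value in zip(instructions, lanes)]

lanes = [1, 2, 3, 4]
simd_result = simd_step("mul", lanes, 10)
mimd_result = mimd_step(["add", "mul", "sub", "add"], lanes, 10)
print(simd_result)  # [10, 20, 30, 40]
print(mimd_result)  # [11, 20, -7, 14]
```

The shared instruction decoder mentioned above corresponds to the single `instruction` argument in `simd_step`: the lanes cannot diverge onto different operations within one step.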

http://www.vrworld.com/2011/11/30/r...md-to-mix-gcn-with-vliw4-vliw5-architectures/

AMD is pushing forward with their Fusion System Architecture (FSA) and the goals of that architecture will take some time to implement – we won’t see a full implementation before 2014. However, Southern Islands brings several key features which AMD lacked when compared to NVIDIA Fermi and the upcoming Kepler architectures.

The GPU itself replaced SIMD array with MIMD-capable Compute Units (CU), which bring support for C++ in the same way NVIDIA did with Fermi,

Graphics Core Next: A True MIMD

AMD adopted a smart compute approach. Graphic Core Next is a true MIMD (Multiple-Instruction, Multiple Data) architecture. With the new design, the company opted for "fat and rich" processing cores that occupy more die space, but can handle more data. AMD is citing loading the CU with multiple command streams, instead of conventional GPU load: "fire a billion instructions off, wait until they all complete". Single Compute Unit can handle 64 FMAD (Fused Multiply Add) or 40 SMT (Simultaneous Multi-Thread) waves. Wonder how much MIMD instructions can GCN take? Four threads. Four thread MIMD or 64 SIMD instructions, your call. As Eric explained, Southern Islands is a "MIMD architecture with a SIMD array".

Interesting.

Reading up on it, their concept of MIMD is if you were on a PC and had multiple programs executing on the GPU at the same time. Each thread would be a different application or game. AMD seems to have an SMT architecture, where wavefront is made up of SIMD instructions. You can occupy the GPU with multiple wavefronts, but it's not a MIMD approach.

All of that said, every GCN GPU is essentially equipped the same way, with ACEs and CUs etc. The programming model for all of them is the same, whether it's PS4, Xbox One, or PC. The hardware is exposed with different APIs. In terms of ACEs and wavefronts, there is nothing particularly different about the PS4 GPU.

I guess you could say the hardware is powerful enough to give decent visual results at an affordable price. Xbox One may as well, and maybe PC as well. I'd more likely give a lot of credit to Sony for actually funding a studio to do research on the subject, rather than enabling it through hardware.

The PS4 is 8x8 MIMD (64 threads).

You use terminology you don't understand. 3dcgi is an AMD employee...

ACEs aren't execution units, they are schedulers. The 18 CUs are the execution units.

ACEs do not execute compute tasks.

Compute queues aren't threads.
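A rough way to picture the queue versus execution unit split, as a Python sketch. This is a toy model under stated assumptions, not a description of the real hardware: `dispatch` is a hypothetical helper, the round-robin draining order is an assumption, and the "units" here are just indices standing in for CUs.

```python
from collections import deque

def dispatch(queues, num_units):
    """Drain command queues round-robin and hand each task to an execution unit.

    The queues only HOLD pending work; the units are what run it.
    Returns a list of (unit_index, queue_name, task) tuples.
    """
    pending = deque((name, deque(tasks)) for name, tasks in queues.items() if tasks)
    assignments = []
    next_unit = 0
    while pending:
        name, tasks = pending.popleft()
        assignments.append((next_unit, name, tasks.popleft()))
        next_unit = (next_unit + 1) % num_units
        if tasks:  # queue still has work, put it back in line
            pending.append((name, tasks))
    return assignments

# A single producer (think: one CPU core) can fill every queue.
queues = {f"queue{i}": [f"job{i}.{j}" for j in range(2)] for i in range(3)}
assignments = dispatch(queues, num_units=2)
for unit, queue_name, task in assignments:
    print(f"unit {unit} runs {task} from {queue_name}")
```

Three queues feed two units here, so the number of queues says nothing by itself about how many things execute at once.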

ACEs don't execute compute tasks but the CU do.

They use the terminology MIMD for CU not ACE...

Ok ...

So is Xbox One 2x8 MIMD (16 threads)? I don't think threads is the correct term to be used here.

What does seem fairly clear is that the execution model for GCN is SMT or SIMT, not MIMD. Each CU has 4 vector SIMD units that share instruction fetch/decode. It does have MIMD features, according to some slides, but that seems to be the case where multiple applications are sharing the GPU, say if you were browsing a website that used GPU resources to render 3D graphics on a web page at the same time that you were using a modelling tool or playing a video game. The SMT or SIMT model is multiple wavefronts, each wavefront being a list of SIMD instructions for the vector units the wavefront is dispatched to. That's how games run on GCN. That's pretty clear from this forum, reading real devs' posts. Search wavefront.
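A small Python sketch of the "multiple wavefronts, each a list of SIMD instructions" model described above. It is a simplification under assumptions: the `Wavefront` class, the toy `OPS` table and the round-robin issue order in `run` are all invented for illustration. The 64-wide wavefront matches GCN, but the real scheduler is far more involved.

```python
from collections import deque

WAVEFRONT_WIDTH = 64  # GCN wavefronts are 64 work-items wide

# Toy SIMD instructions: each one is applied to all lanes of a wavefront.
OPS = {
    "add1": lambda x: x + 1,
    "double": lambda x: x * 2,
    "negate": lambda x: -x,
}

class Wavefront:
    def __init__(self, name, program, data):
        self.name = name
        self.program = deque(program)  # the wavefront's list of SIMD instructions
        self.data = list(data)
        if len(self.data) != WAVEFRONT_WIDTH:
            raise ValueError("a wavefront needs exactly 64 lanes of data")

    def done(self):
        return not self.program

    def issue_next(self):
        """Issue one instruction across every lane in lockstep."""
        instr = self.program.popleft()
        op = OPS[instr]
        self.data = [op(x) for x in self.data]
        return instr

def run(wavefronts):
    """Interleave wavefronts round-robin (assumed policy) and return the issue trace."""
    pending = deque(w for w in wavefronts if not w.done())
    trace = []
    while pending:
        wave = pending.popleft()
        trace.append((wave.name, wave.issue_next()))
        if not wave.done():
            pending.append(wave)
    return trace

wave_a = Wavefront("A", ["add1", "double"], range(WAVEFRONT_WIDTH))
wave_b = Wavefront("B", ["negate"], range(WAVEFRONT_WIDTH))
trace = run([wave_a, wave_b])
print(trace)  # [('A', 'add1'), ('B', 'negate'), ('A', 'double')]
```

Different wavefronts can be running different programs, but inside any one wavefront all 64 lanes execute the same instruction, which is why the model reads as SIMT rather than MIMD.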

I'm not using it for ACE. I'm using it because the PS4 APU can run 8 compute pipelines. I had never seen those AMD slides before.

GCN is all 3: MIMD, SIMD, & SMT.

You keep mixing up your points. You said you're not talking about HSA but GPGPU. Then you say PS4's APU is 8x8 MIMD because there's 8 CPU cores feeding 8 ACEs. One core can feed 8 ACEs. They are command buffers. It's all irrelevant because whether PS4's APU is described as MIMD or not, whether it has one core feeding 8 ACEs or eight cores feeding 2 ACEs, it's still not doing anything any other system can't do regards novel rendering techniques.

You restarted this thread to claim recent evidence proves you right. What specific techniques in games like Dreams are enabled by PS4's architecture and are not possible on XB1 or PC? How do 8 cores (six available to devs) feeding 8 ACEs enable SDF splat based rendering on PS4 when XB1's 6+ cores feeding 2 ACEs can't use that technique?

I called the PS4 APU an 8x8 MIMD because the CPU can basically use the GPGPU as a co-processor with the 8x8 command buffers. And yes, that's HSA. I already told you that the APU is a product of HSA.

Who said that it can't be done on an Xbox One or PC? What does that even have to do with anything? This is another one of your loopholes.

No, it's your thread title and opening question. You talk exclusively about what PS4 brings to software rendering, not modern rendering architectures. And go on to discuss PS4's architecture as being beneficial to software rendering. If PS4's architecture doesn't matter to your ideas, why are you raising PS4 as being 8x8 MIMD? If you accept it makes no odds and XB1 and PC are just as capable at novel rendering solutions thanks to GPU compute, why'd you ever raise PS4's APU architecture?

I repeat, if you just want to know what software rendering solutions will arise this gen, you need to ask the right (platform agnostic) question, probably in the Algorithms forum (although the console ecosystems will generally be more favourable to experimentation thanks to 1st/2nd parties, so there's some merit to basing the discussion in Console Tech).

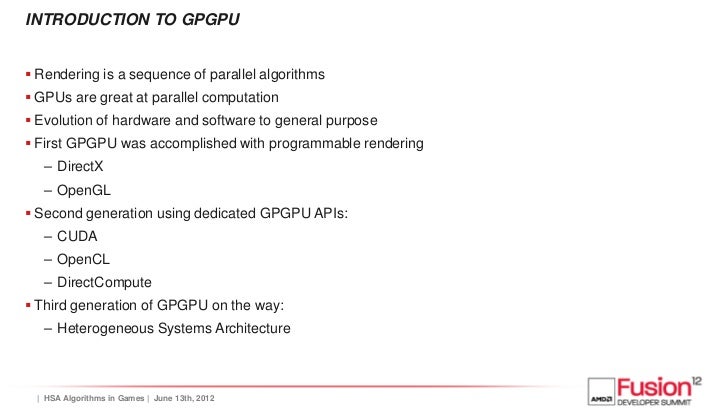

This is not the same GPGPU compute; it's HSA GPGPU compute.

What is that even in answer to?!?! Are you talking about HSA compute? Is this thread "can HSA compute breathe new life into software rendering"? If so, 1) HSA has nothing to do with what devs are currently achieving with compute (eg. Dreams). 2) What do you mean here...?

In one breath you say it's nothing to do with HSA, and the next you say it's all about the HSA. And then you say it's nothing about HSA again. And then you're talking about PS4 specifically. And then you say you're not excluding other platforms!

..., this has nothing to do with HSA but the PS4 APU is a product of HSA & the APU was designed in a way that made it better at computing. I simply asked what could be done using the PS4 for software based rendering seeing as it was designed with better GPGPU computing in mind.

onQ:

Let's start over because honestly your terms are mixed to me and I'm still struggling to find your argument beyond trying to explain "how" or "what".

Let me start you off:

GPGPU: general-purpose computing done on the GPU. Traditional GPGPU code copies the values from system RAM to graphics RAM, then sends the code and the pointers to the data over to the GPU to run against. When the GPU is done, the result needs to be copied back to system RAM.

Software Rendering: drawing graphics entirely on the CPU.

HSA: an integrated architecture in which the CPU and GPU can access the same memory locations. It saves the step of copying values back and forth for processing. In PC space, for graphics, this copy is often one way only. For GPGPU this will save 2 copies: one from DDR to GDDR, and one back.

Media Molecule: looks like graphics algorithms performed entirely by compute shaders.
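To make the "saves 2 copies" point concrete, here is a minimal Python analogy. It is only a sketch: plain byte buffers stand in for system RAM and graphics RAM, a loop stands in for the GPU kernel, and `run_with_copies` and `run_shared` are hypothetical names. No real GPU API is involved.

```python
def run_with_copies(system_ram):
    """Discrete-style GPGPU: copy in, compute on the device copy, copy back."""
    copies = 0
    device_ram = bytearray(system_ram)  # copy 1: system RAM -> graphics RAM
    copies += 1
    for i in range(len(device_ram)):    # stand-in for the GPU kernel
        device_ram[i] = (device_ram[i] * 2) % 256
    system_ram[:] = device_ram          # copy 2: graphics RAM -> system RAM
    copies += 1
    return copies

def run_shared(system_ram):
    """Shared-memory style: the 'GPU' works through a view of the same bytes."""
    shared = memoryview(system_ram)     # no copy, same underlying buffer
    for i in range(len(shared)):        # same stand-in kernel
        shared[i] = (shared[i] * 2) % 256
    return 0

a = bytearray([1, 2, 3, 4])
b = bytearray([1, 2, 3, 4])
copies_a = run_with_copies(a)
copies_b = run_shared(b)
print(copies_a, list(a))  # 2 [2, 4, 6, 8]
print(copies_b, list(b))  # 0 [2, 4, 6, 8]
```

Both paths produce the same result; the only difference is the two buffer copies, which is the cost the shared-memory definition above is about.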

Okay, I think I nailed down the anchor points. Could you tell us

A) What advantages does software rendering have over the fixed-function/programmable pipeline? There are some if I recall, but often not worth the trade-off.

B) Using those advantages that software has over hardware, what aspects of PS4's HSA will help accomplish or exploit them in ways that previously were not possible?
