Alucardx23

Regular

This might be a stupid question, but here goes. It's about this passage from the VGleaks article on Durango display planes:

“The GPU does not require that all three display planes be updated at the same frequency. For instance, the title might decide to render the world at 60 Hz and the UI at 30 Hz, or vice-versa. The hardware also does not require the display planes to be the same size from one frame to the next.”

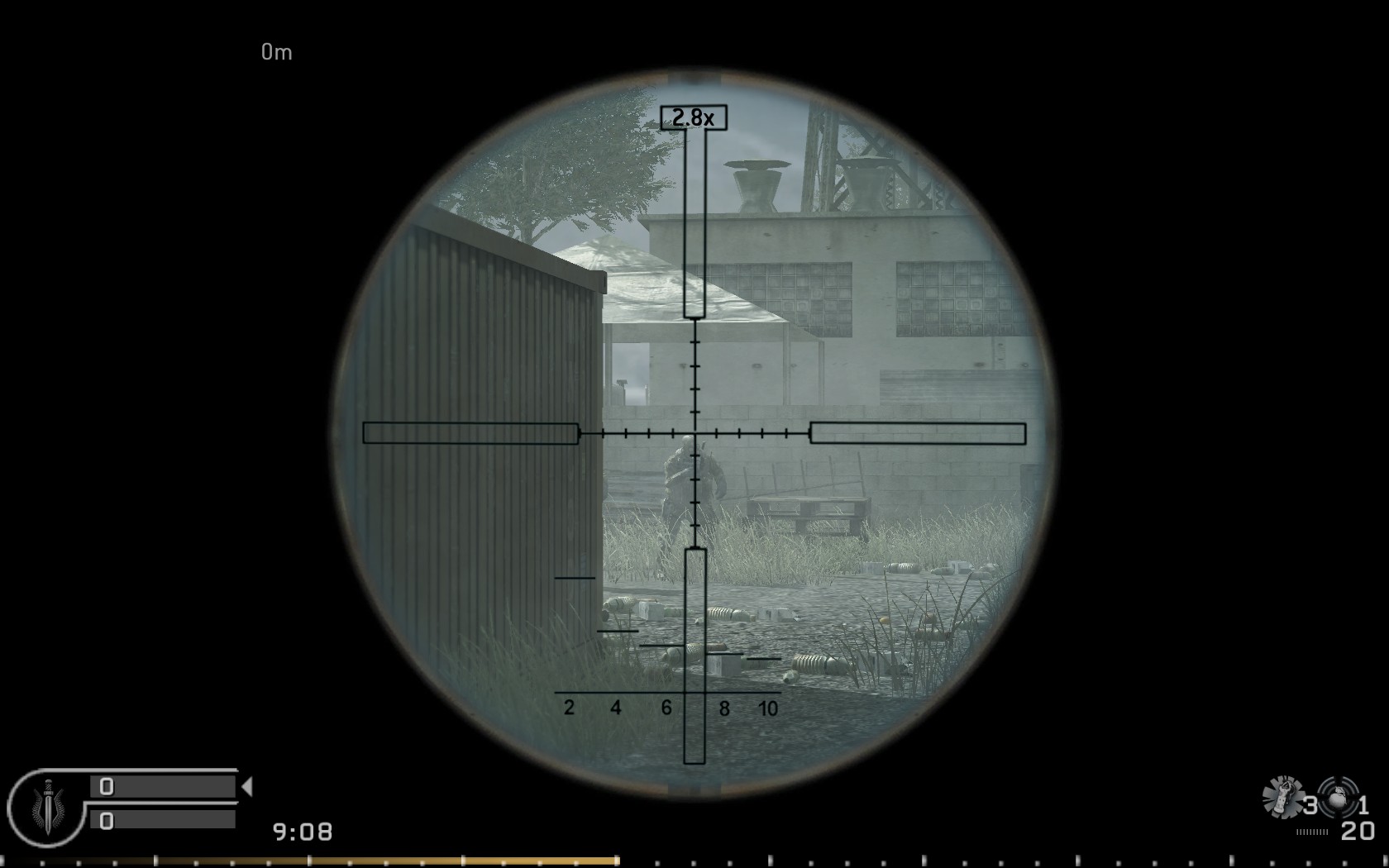

Could this be used to decouple the framerate of the responsive elements (what I'd call the gameplay framerate) from the framerate of the rest of the game? Taking a first person shooter as an example, could the first plane consist of the gun and HUD running at 60 frames per second, while the rest of the game on the second plane runs at 30 frames per second, or as high as the game can manage? This would mean that the game's responsiveness would not suffer from framerate slowdown, at least not as much as in a traditional game where everything is rendered into the same frame buffer. I use a first person shooter as an example, but I think this could apply to any type of game.

http://www.vgleaks.com/durango-display-planes/
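
To make the idea a bit more concrete, here is a rough sketch of the update schedule I have in mind. It is only a toy model and not the Durango hardware or any real API: the `Plane` class, the `update_every` parameter and the `simulate` function are all names I made up, and I am assuming a 60 Hz display where the HUD plane gets a new frame every refresh and the world plane every second refresh. It just tracks how stale each plane's frame is at every refresh.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Plane:
    """A display plane in a toy model (hypothetical, not a real API)."""
    name: str
    update_every: int        # display refreshes between newly rendered frames
    latest_frame: int = -1   # refresh tick at which the current frame was rendered

    def maybe_render(self, tick: int) -> None:
        # Render a new frame only on this plane's own schedule.
        if tick % self.update_every == 0:
            self.latest_frame = tick


def simulate(refresh_ticks: int, planes: list[Plane]) -> list[dict[str, int]]:
    """For each display refresh, record the age (in refreshes) of each plane's frame."""
    history = []
    for tick in range(refresh_ticks):
        for plane in planes:
            plane.maybe_render(tick)
        # The compositor always shows the most recent frame of every plane.
        history.append({p.name: tick - p.latest_frame for p in planes})
    return history


# Assumed setup: 60 Hz display, HUD at 60 Hz, world at 30 Hz.
hud = Plane("hud", update_every=1)
world = Plane("world", update_every=2)
ages = simulate(4, [hud, world])
for tick, age in enumerate(ages):
    print(tick, age)
```

In this model the HUD plane's frame is always fresh, while the world plane's frame is one refresh old on every other tick. Whether that actually feels more responsive in a real game is exactly what I'm asking about.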

