itsmydamnation

Veteran

AMD accuses Nvidia of disabling multi-core CPU support in PhysX API

Except that wasn't what was said, was it?

Keep looking; I'm sure you will find your proof, or whatever you're looking for, someday, maybe...

We continue to invest substantial resources into improving PhysX support on ALL platforms--not just for those supporting GPU acceleration.

NVIDIA confirms my suspicions:

http://www.tomshardware.com/news/nvidia-physx-amd-gpu-multicore,9481.html#xtor=RSS-181

Huddy wasn't talking facts...

all the complaining about PhysX not maximizing CPU cores seems to completely ignore the fact that custom built physics implementations don't either. Maybe there's something to all this besides Nvidia's evil intentions to cripple CPU performance?

The difference is that with, say, Batman we have proof positive that the operations PhysX is doing are the bottleneck, given that it runs slower than with PhysX on the GPU while not maxing out the CPUs. We know the workload is relatively latency-insensitive and trivially parallelizable (it can run on a GPU, after all). We don't know what the bottlenecks in those other games are.

Just came across that. Seems to corroborate what ChrisRay was saying earlier. Demirug made an excellent point as well: all the complaining about PhysX not maximizing CPU cores seems to completely ignore the fact that custom-built physics implementations don't either. Maybe there's something to all this besides Nvidia's evil intentions to cripple CPU performance?

Why is CPU utilization lower when PhysX is enabled? And why is CPU utilization so low at all? If the CPU is bottlenecking the PhysX calculations, shouldn't the increased load be pushing the CPU to its limits?

http://www.tomshardware.com/reviews/batman-arkham-asylum,2465-10.html

We're trying to get clarification from the developers at Rocksteady about this phenomenon--it's almost as though the game is artificially capping performance at a set level, and is then using only the CPU resources it needs to reach that level.
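One way average CPU utilization could fall while the PhysX load rises is if the extra physics work is serialized on a single thread that sits on the frame's critical path. Below is a toy model under that assumption only; it is not a claim about how Batman: AA or PhysX actually schedules work, and `average_utilization` plus every millisecond figure in it are hypothetical.

```python
"""Toy model of average CPU utilization when physics runs on one thread.

Hypothetical illustration only: the parameters are made-up values, not
measurements of Batman: AA or of how PhysX schedules its work.
"""


def average_utilization(cores: int, serial_ms: float, physics_ms: float,
                        other_ms: float) -> float:
    """Return average utilization (0..1) across `cores` for one frame.

    Assumptions of this toy model:
    - The frame's critical path is `serial_ms` of non-physics work plus
      `physics_ms` of physics on a single thread, so
      frame time = serial_ms + physics_ms.
    - `other_ms` is work on the remaining cores that fits inside the frame
      and does not grow when the physics load grows.
    """
    if cores < 1:
        raise ValueError("need at least one core")
    frame_ms = serial_ms + physics_ms
    if frame_ms <= 0:
        raise ValueError("frame time must be positive")
    if other_ms > (cores - 1) * frame_ms:
        raise ValueError("other work does not fit on the remaining cores")
    busy_ms = frame_ms + other_ms      # total core-milliseconds of work done
    capacity_ms = cores * frame_ms     # core-milliseconds available
    return busy_ms / capacity_ms


if __name__ == "__main__":
    # Hypothetical quad-core frame: 10 ms serial work, 15 ms spread elsewhere.
    for physics in (0.0, 5.0, 20.0, 40.0):
        u = average_utilization(cores=4, serial_ms=10.0,
                                physics_ms=physics, other_ms=15.0)
        print(f"physics {physics:4.1f} ms -> utilization {u:.1%}")
```

In this model the longer single-threaded physics step stretches the frame while the fixed work on the other cores stays the same, so the average slides toward 1/cores (25% on four cores). The drop comes from one busy thread leaving the others idle, not from the CPU doing less physics, which is the kind of behaviour being argued about here.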

We don't know what the bottlenecks in those other games are.

Tom's Hardware got a bit puzzled by PhysX on the CPU in their Batman review. Weird: set software PhysX to high and CPU utilization decreases instead of increasing. Shouldn't PhysX use the CPU more, not less, when you set PhysX to high on the CPU?

Yes, that's exactly my point. Why is it that PhysX games qualify for such scrutiny yet other physics engines don't? There also aren't any examples of other physics engines doing particle simulations on the CPU with good performance.

Yeah, that's really weird. I'm not one for conspiracy theories, so it could be something as simple as PhysX putting a higher load on the memory subsystem; after all, Nvidia did mention that SPH is much faster on Fermi due to better caching. Also, as Sontin pointed out, the PhysX thread(s) could be starving other threads that are normally free to run.

Since physics is another one of those ridiculously parallel workloads, it doesn't make sense for it not to be implemented on multiple cores, and the perceived conflict of interest between Nvidia's GPUs and its CPU implementation simply adds fuel to the fire.
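Why particle-style physics gets called "ridiculously parallel" can be sketched with a toy integrator: each particle's next state depends only on its own current state, so the array can be cut into chunks and each chunk handed to a different worker. This is a minimal illustration with hypothetical names (`step_particle`, `step_chunk`, `step_parallel`), using plain gravity and a floor bounce; it is not PhysX's solver. Interactions between particles or bodies (collisions, constraints, SPH neighbour forces) add dependencies that make the split less trivial. In CPython the GIL means the thread pool here demonstrates the decomposition rather than a speedup; a native engine could map the same chunks onto OS threads or SIMD lanes.

```python
"""Sketch of a data-parallel particle update (hypothetical, not PhysX code)."""

from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Particle = Tuple[float, float]  # (height in m, vertical velocity in m/s)

GRAVITY = -9.81  # m/s^2


def step_particle(p: Particle, dt: float) -> Particle:
    """Semi-implicit Euler step under gravity with a crude floor bounce."""
    y, vy = p
    vy += GRAVITY * dt
    y += vy * dt
    if y < 0.0:                      # went through the floor at y = 0
        y, vy = 0.0, -0.5 * vy       # clamp and bounce with damping
    return (y, vy)


def step_chunk(chunk: List[Particle], dt: float) -> List[Particle]:
    """Advance one contiguous chunk; reads and writes only its own particles."""
    return [step_particle(p, dt) for p in chunk]


def step_parallel(particles: List[Particle], dt: float,
                  workers: int) -> List[Particle]:
    """Split into `workers` chunks and step each as an independent task.

    No chunk touches another chunk's data, so no locking is needed, and
    Executor.map returns results in input order.
    """
    size = max(1, -(-len(particles) // workers))  # ceiling division
    chunks = [particles[i:i + size] for i in range(0, len(particles), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(step_chunk, chunks, [dt] * len(chunks))
    return [p for chunk in results for p in chunk]


if __name__ == "__main__":
    particles = [(float(i), 0.0) for i in range(1000)]
    serial = step_chunk(particles, 0.016)
    parallel = step_parallel(particles, 0.016, workers=4)
    print("identical results:", serial == parallel)
```

Because the chunked result is identical to the serial one, the split needs no synchronization; that same independence is what lets this kind of work run on a GPU.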

Agreed, I just don't get why the bar for PhysX games is set higher than for other games. I can't reconcile the uproar over PhysX with the fact that most entire game engines (including physics) do not scale past two cores. Take Crysis, for example:

Easy enough to test, although I put the odds somewhere between slim and none: underclock the memory (without SIMD it's not going to be the cache). I don't have the game, though.

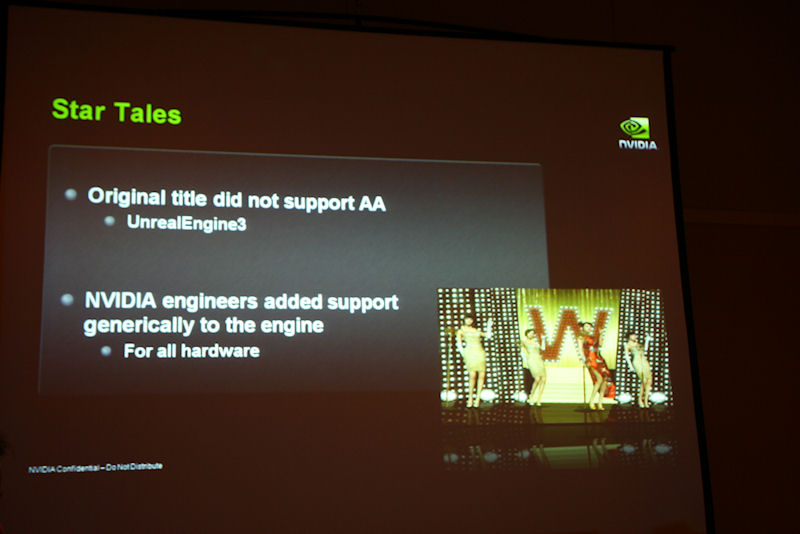

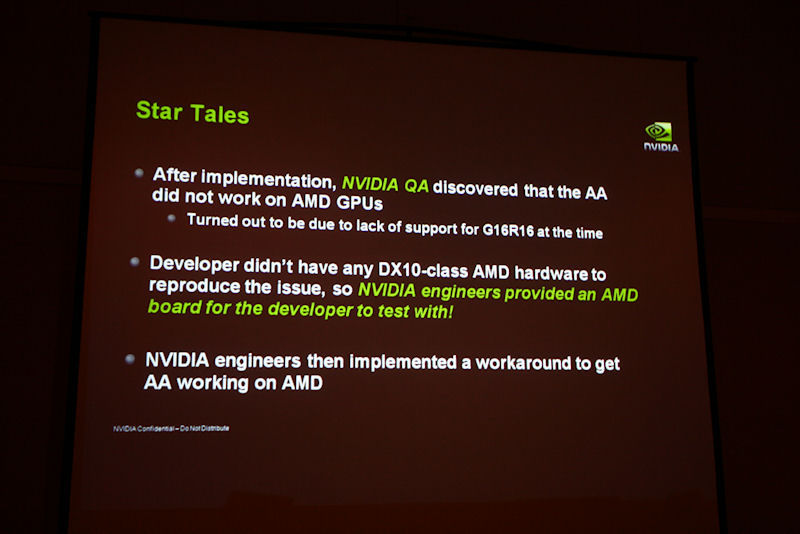

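That implementation was, of course, effective not only on NVIDIA GPUs but also on AMD GPUs; however, because the developer could not obtain an AMD Direct3D 10-capable GPU in China, NVIDIA reportedly bought one at a nearby retailer and sent it to them for testing.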

It's an unfortunate situation. I'm trying to give Nvidia the benefit of the doubt here, but there's a lot of doubt, especially when a game with as much cross-vendor controversy as Batman: AA shows competing hardware as 100% PhysX-limited.