Guess I just imagined them saying AMD FX-6300 with a GeForce GTX 760.

Not when they said the experience would be better on a PC. That machine shouldn't hold a candle to a mature Xbox One, and right now the Xbox One is still a premature console.

Heck, even the PS4 is; look at the library of games... The X1 is ahead in that sense, but not by much.

They didn't use a "powerful PC".

They used different PCs, and one of them was quite powerful, hence unfair.

Sorry, but seeing as the video does not show the game running as well as possible on the PC (e.g. they limited the resolution to 1920x1080), how can you possibly make that claim?

And Digital Foundry themselves disagree with you.

It's an £80 CPU and a £175 GPU, £255 in total. You could complete the rest of the PC for not a huge amount more than the cost of an XB1. To say that the cost of the XB1 (£400) is marginal compared to the PC DF used is laughable.

Granted, but they did choose a PC that has bottom-of-the-barrel sound hardware (aka onboard sound).

Alas, that's the sound hardware most PCs have these days. The Xbox One is much more capable than anything else sound-wise, something that is always forgotten and swept under the rug when these unfair comparisons are made.

Let's get something straight: having more colours is not inferior. Blame the game/system/monitor for not being able to handle the full range.

I really hope you're not pointing to that as an example of something good? If the game needs 6 months of work on it, it shouldn't have been released.

Seems like you're hurt that the game is better on the PC, so you've made a bunch of stuff up to defend the XB1.

If you are going to play on a TV, Limited range is undoubtedly the best choice. If you are going to use a computer monitor, it is a matter of preference. Still... Full range sucks quite a bit.

Microsoft recommends on its Xbox.com site that you use Standard (Limited) range, because it is the best for a TV and you will never have problems with that range.

https://support.xbox.com/en-US//xbox-one/system/adjust-display-settings

Color space

“We highly recommend that you leave the color space setting set to TV (RGB Limited). RGB Limited is the broadcast standard for video content and is intended for use with televisions.”

Why is it the best advice to NEVER use full range on a TV?

Limited range works on all televisions, and almost all the video material you can watch is created with Standard RGB in mind, since that is usually the original format in which the material was produced. Moreover, many TVs made in 2013 and 2014 don’t even support Full range.

That being said, and since this is a tech forum, I'll explain what limited and full range are, as I understand them.

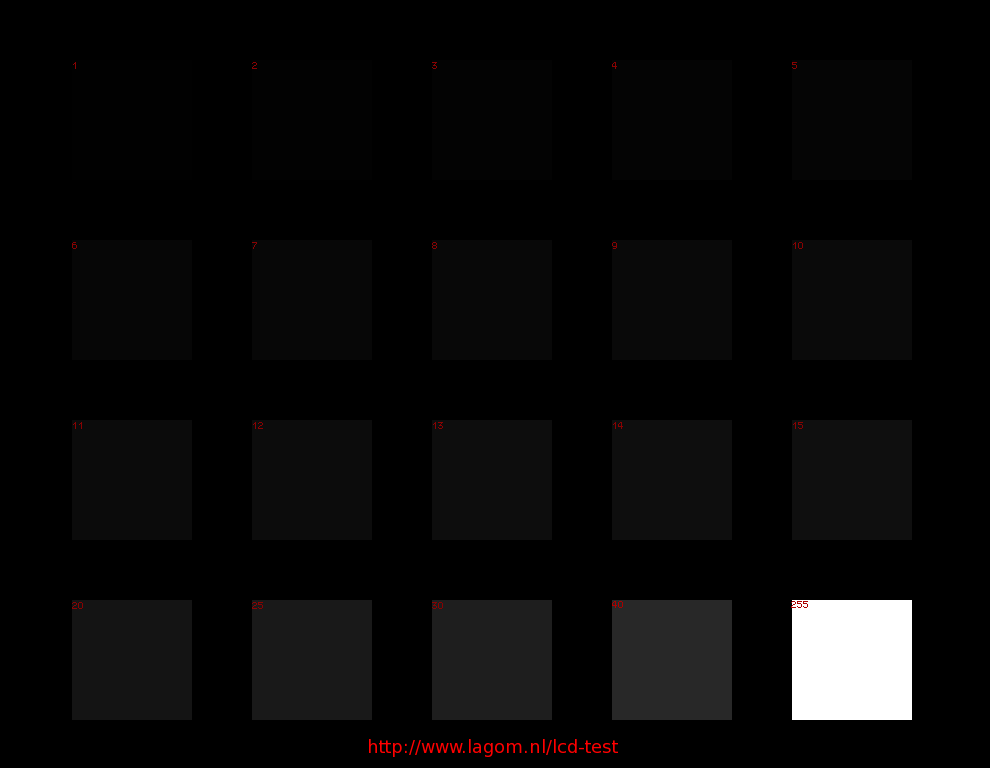

Limited range (Standard RGB) and Full range are two ways of defining the values of black and white. Full range goes from 0 to 255; that is, counting 0 as the first step, there are 256 steps from black to white.

In contrast, Standard RGB (or limited RGB) has 36 fewer steps than full RGB: absolute black to absolute white runs from value 16 to value 235, which is 220 steps.
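A quick sanity check of the step counts, using the 8-bit values given above (the variable names are just for illustration). The 36 missing steps are the 16 codes below black (0-15) plus the 20 codes above white (236-255):

```python
# Count the code values (steps) available in each 8-bit range.
FULL_BLACK, FULL_WHITE = 0, 255
LIMITED_BLACK, LIMITED_WHITE = 16, 235

full_steps = FULL_WHITE - FULL_BLACK + 1           # inclusive count: 256
limited_steps = LIMITED_WHITE - LIMITED_BLACK + 1  # inclusive count: 220

# Codes that limited range leaves unused below black and above white.
below_black = LIMITED_BLACK - FULL_BLACK           # 0-15   -> 16 codes
above_white = FULL_WHITE - LIMITED_WHITE           # 236-255 -> 20 codes

print(full_steps, limited_steps, full_steps - limited_steps)
```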

In other words, with Standard RGB the value of black is 16, which is the first step, and absolute white sits at step 235.
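For illustration, here is a minimal sketch of the linear mapping between the two ranges, assuming 8-bit values. `limited_to_full` and `full_to_limited` are hypothetical helper names, and clamping out-of-range codes is an assumption (some TVs pass below-black and above-white codes through):

```python
def limited_to_full(v: int) -> int:
    """Expand an 8-bit limited-range value (16-235) to full range (0-255)."""
    v = min(max(v, 16), 235)             # assumption: clamp out-of-range codes
    return round((v - 16) * 255 / 219)   # 219 = number of intervals from 16 to 235


def full_to_limited(v: int) -> int:
    """Compress an 8-bit full-range value (0-255) into limited range (16-235)."""
    v = min(max(v, 0), 255)
    return round(16 + v * 219 / 255)


print(limited_to_full(16), limited_to_full(235))  # black and white in full range
print(full_to_limited(0), full_to_limited(255))   # black and white in limited range
```

Black maps to black and white to white in both directions; everything in between is stretched or squeezed, so a round trip through both can shift some values by one step.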

So why choose limited range on a TV, and what problems may arise if you don’t? First, movies, videos and basically all the material you see on DVD or Blu-ray is encoded in YCbCr with Limited range ...
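The post doesn't say which video standard it means. Assuming HD material mastered to ITU-R BT.709, 8-bit limited range is defined roughly like this (a sketch, not a reference implementation; the function name is made up). Note that the chroma channels use 16-240, centred on 128, rather than 16-235:

```python
def rgb_to_ycbcr_709_limited(r: float, g: float, b: float) -> tuple[int, int, int]:
    """Convert non-linear R'G'B' in [0, 1] to 8-bit BT.709 limited-range Y'CbCr."""
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b  # luma, 0..1
    cb = (b - y) / 1.8556                     # blue-difference chroma, -0.5..0.5
    cr = (r - y) / 1.5748                     # red-difference chroma, -0.5..0.5
    # Limited ("studio") range: Y' spans 16-235, Cb/Cr span 16-240.
    return round(16 + 219 * y), round(128 + 224 * cb), round(128 + 224 * cr)


print(rgb_to_ycbcr_709_limited(0, 0, 0))  # black
print(rgb_to_ycbcr_709_limited(1, 1, 1))  # white
```

Black lands on luma 16 and white on luma 235, so a disc player or console outputting this material as-is is already speaking Limited range.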

Furthermore, the problem with choosing Full range on a TV that does not accept full RGB is that values which should be gray are shown as "black". (E.g. values 19, 20 and 21 are almost black in Limited range, where black starts at 16, BUT those same steps are gray in Full range ... etc.)
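To put numbers on the 19-20-21 example, this hypothetical `brightness` helper shows how far each code sits between black and white under each interpretation. It measures position in the code range only and ignores gamma, so it is not actual light output:

```python
def brightness(code: int, full_range: bool) -> float:
    """Position of an 8-bit code between black (0.0) and white (1.0).

    Linear position in the code range only; gamma is ignored.
    """
    black, white = (0, 255) if full_range else (16, 235)
    return min(max((code - black) / (white - black), 0.0), 1.0)


for code in (19, 20, 21):
    print(code,
          f"limited: {brightness(code, False):.1%}",
          f"full: {brightness(code, True):.1%}")
```

Step 20 comes out at about 1.8% of the way to white when read as Limited range, versus about 7.8% when read as Full range, which is why the same value looks near-black in one case and visibly gray in the other.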

This is an example of a full range image, represented step by step:

In the picture above you can see that step 20, which is close to step 16 (absolute black in Limited RGB), would be almost black in Standard RGB, but it is gray instead.

BUT if you display this image on a Limited range TV (steps 16 to 235), you will hardly be able to see steps 15 and under, because they are all shown as black. If that happens, no worries, it’s not your fault: you are viewing a Full range picture on a Standard RGB/Limited range display.
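A sketch of why the lowest steps disappear, assuming the display simply clips codes below 16 to black and above 235 to white before expanding to its panel range (real TVs differ; `shown_on_limited_display` is a made-up name):

```python
def shown_on_limited_display(code: int) -> int:
    """Output level (0-255) a display expecting limited range gives an 8-bit code.

    Assumes clipping below 16 and above 235, then linear expansion.
    """
    clipped = min(max(code, 16), 235)
    return round((clipped - 16) * 255 / 219)


ramp = range(256)  # a full-range 0-255 step ramp
crushed = [c for c in ramp if shown_on_limited_display(c) == 0]
blown = [c for c in ramp if shown_on_limited_display(c) == 255]
print(f"{len(crushed)} codes shown as black: {crushed[0]}-{crushed[-1]}")
print(f"{len(blown)} codes shown as white: {blown[0]}-{blown[-1]}")
```

Under that assumption, codes 0-16 all come out as the same black and codes 235-255 as the same white, so the darkest and brightest bars of a full-range ramp merge together.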

If in doubt, always use Limited range, and the image will look good to everyone regardless of the TV.

That’s why Full range sucks so much, despite DF treating it as if it were the Holy Grail. It’s not the way to go.