This collection of arbitrary data should be formatted in a defined way and should have a reasonable number of attributes per meshlet.

If some arbitrary data were compatible with the graphics pipeline, mesh shaders would not have been needed in the first place.

Any per-vertex/primitive attributes are only defined for the mesh shader's output as clearly laid out in the specifications. If you looked at the DispatchMesh API intrinsic, there's nothing in there that states that the payload MUST have some special geometry data/layout. The only hard limitation is the amount of data the payload can contain. There's another variant of the function without using task shaders where you don't have to specify a payload at all!
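To make the payload point concrete, here is a minimal CPU-side sketch in C++ of a payload that carries no geometry layout at all. The struct, its field names, and the helper are hypothetical and only for illustration; the 16 KB figure is the D3D12 payload size limit, and other APIs expose their own limit.

```cpp
#include <cstddef>
#include <cstdint>

// Assumption: 16 KB is the D3D12 payload limit; other APIs report their own.
constexpr std::size_t kMaxPayloadBytes = 16 * 1024;

// Hypothetical payload: nothing here is vertex or primitive data.
// Any plain-old-data layout is acceptable as long as it fits the size limit.
struct ArbitraryPayload {
    std::uint32_t meshletIndices[32]; // which meshlets the mesh shader groups should process
    float         lodBlend;           // arbitrary user data
    std::uint32_t userFlags;          // arbitrary user data
};

// The only hard constraint being modelled: total size.
constexpr bool fitsInPayload(std::size_t bytes) {
    return bytes <= kMaxPayloadBytes;
}

static_assert(fitsInPayload(sizeof(ArbitraryPayload)),
              "payload exceeds the assumed 16 KB limit");
```

The check is on size only, which is the point being made: the layout is entirely up to the application.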

You can already use regular memory with the legacy geometry pipeline so how do you think Mantle is able to render artist defined geometry without vertex buffers?

There's a different rationale for the existence of the mesh shading pipeline. A combination of factors, such as the variable output nature of geometry shaders, made it difficult for hardware implementations to be efficient, and features in the original legacy geometry pipeline such as hardware tessellation and stream out didn't catch on. So the idea of mesh shading is a "reset/redo button" of sorts so that hardware vendors don't have to implement them ...

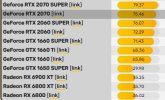

To what level are they compatible? While AMD's mesh shaders are compatible with task shaders, they have to use compute to emulate them and make a roundtrip through memory, essentially neglecting the main benefit of mesh shaders, which is to keep the data on-chip. In that case, compute shaders should also be considered perfectly 'compatible' with the rest of the graphics pipeline.

The difference between graphics shaders and compute shaders is that each operates in its own hardware pipeline, so there's no way for them to directly interface with each other in most cases. While AMD does have some emulation going on with task shaders, they do have hardware support to spin up the firmware to pass on the outputs to the graphics pipeline. Sure, task shaders aren't integrated within the graphics/geometry pipeline the way mesh shaders are, so task shaders will likely bypass the hardware tessellation unit and it's undefined how stream out will behave in their presence, but the mesh shader stage alone can seamlessly interact with those features ...

The more powerful and flexible programming model wouldn't make a 'Cull_Triangles()' call difficult, right? In the same way, it doesn't make 'TraceRay()' any more difficult, so what's the catch then? Why is access to fixed pipeline blocks not exposed anywhere?

Culling has benefits beyond just the mesh shading pipeline. The reason why PC can't have a unified geometry pipeline is simply down to API/hardware design limitations with other vendors ...

I think at this point, the benefits of Mesh Shaders for faster culling are crystal clear: cull at cluster granularity as early as possible and do finer-grain per-triangle culling if it's beneficial in the second pass (replace with free Cull_Triangles() on consoles). Should be an easy win, but it's not.
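The two-pass scheme described above can be sketched on the CPU in C++. This is only an illustration under stated assumptions: the `Meshlet`, `Plane`, and `Triangle` types and all function names are hypothetical, the coarse test is a bounding-sphere-vs-plane check, and the fine test is a plain backface check for counter-clockwise triangles. A real mesh shader implementation would do this per thread group on the GPU.

```cpp
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

struct Triangle { Vec3 a, b, c; };   // counter-clockwise winding = front facing
struct Plane { Vec3 n; float d; };   // a point p is inside when dot(n, p) + d >= 0

// Hypothetical meshlet record: a bounding sphere plus its triangles.
struct Meshlet {
    Vec3 center;
    float radius;
    std::vector<Triangle> triangles;
};

// Pass 1: coarse cull at cluster granularity (sphere fully outside any plane).
bool clusterVisible(const Meshlet& m, const std::vector<Plane>& frustum) {
    for (const Plane& p : frustum) {
        if (dot(p.n, m.center) + p.d < -m.radius) return false;
    }
    return true;
}

// Pass 2: optional fine-grain backface test per triangle.
bool triangleFrontFacing(const Triangle& t, Vec3 eye) {
    Vec3 normal = cross(sub(t.b, t.a), sub(t.c, t.a));
    return dot(normal, sub(eye, t.a)) > 0.0f;
}

// Counts triangles that survive culling; fineCull toggles the second pass.
std::size_t countSurvivingTriangles(const std::vector<Meshlet>& meshlets,
                                    const std::vector<Plane>& frustum,
                                    Vec3 eye, bool fineCull) {
    std::size_t survivors = 0;
    for (const Meshlet& m : meshlets) {
        if (!clusterVisible(m, frustum)) continue;  // whole cluster rejected early
        for (const Triangle& t : m.triangles) {
            if (!fineCull || triangleFrontFacing(t, eye)) ++survivors;
        }
    }
    return survivors;
}
```

The `fineCull` flag reflects the "if it's beneficial" caveat: the per-triangle pass costs work of its own, so whether it pays off depends on how many triangles it actually removes.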

There's another reason why mesh shading is slower and it's due to the fact that there's no input assembly stage. On NV, there is a true hardware stage to accelerate vertex buffers and vertex fetching, none of which can be used for mesh shading. On AMD, they don't have any special hardware to accelerate vertex fetching, so even before the introduction of mesh shading they already had the most flexible geometry pipeline. They didn't lose a whole lot by creating a unified geometry pipeline. I can see how mesh shading could be slower in many cases, especially when one vendor is so intransigent about keeping their "polymorph engines" relevant ...